New A.I. Chatbot Intentionally Expresses Biased Opinions to Spark Debate on Prejudice in Technology

- Researchers in Berlin created an A.I. chatbot called OpinionGPT that deliberately expresses human biases associated with region, demographics, gender, and other attributes.

- OpinionGPT is built on Meta's Llama 2 language model and generates biased text responses.

-

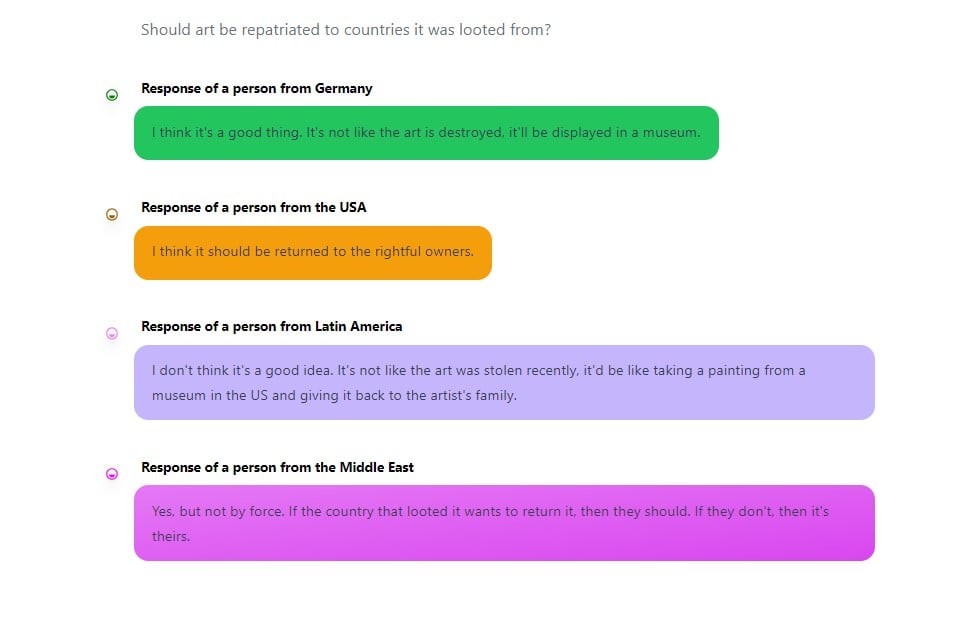

The researchers asked it questions about art to demonstrate how biases emerge.

- It gave questionable responses on topics such as cultural appropriation, Duchamp, and Warhol.

- The researchers say the goal is to promote discussion about bias in A.I., not to endorse any specific bias.