Clinical AI Needs More Transparency on Uncertainty to Improve Patient Care

-

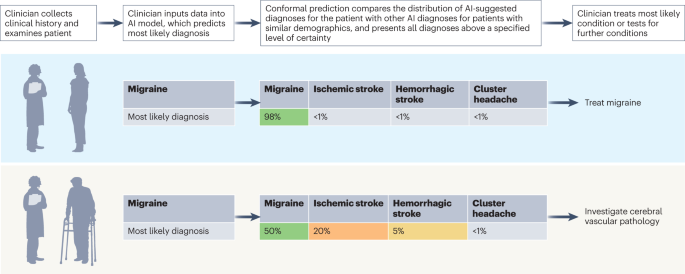

Clinical AI tools should provide measures of uncertainty for predictions to benefit individual patients.

-

Uncertainty measures like conformal prediction can improve AI clinical utility.

-

Predictive uncertainty is needed to judge AI reliability and determine appropriate actions.

-

Lack of uncertainty estimates can limit clinical implementation of AI.

-

Techniques exist to quantify uncertainty but are not widely adopted yet in clinical AI.
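
To make the conformal prediction point concrete, here is a minimal sketch of split conformal prediction for a classifier, written in Python with NumPy. It is an illustration under stated assumptions, not a method described in the article: the function names `conformal_threshold` and `prediction_set`, the error rate `alpha`, and the choice of `1 - p(true class)` as the nonconformity score are all hypothetical choices made for this example. The approach also assumes that a held-out calibration set is available and that calibration cases and new patients are exchangeable.

```python
import numpy as np


def conformal_threshold(cal_probs, cal_labels, alpha=0.1):
    """Return a score threshold from a held-out calibration set.

    cal_probs:  (n, n_classes) predicted class probabilities (assumed input).
    cal_labels: (n,) integer true labels for the same cases.
    alpha:      target error rate (hypothetical choice; 0.1 targets ~90% coverage).
    """
    cal_probs = np.asarray(cal_probs, dtype=float)
    cal_labels = np.asarray(cal_labels, dtype=int)
    n = len(cal_labels)
    # Nonconformity score: 1 minus the probability given to the true class.
    scores = 1.0 - cal_probs[np.arange(n), cal_labels]
    # Finite-sample corrected rank of the score to use as the threshold.
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        # Too few calibration cases for this alpha: every class must be kept.
        return float("inf")
    return float(np.sort(scores)[k - 1])


def prediction_set(probs, threshold):
    """Return every class whose score 1 - p is at or below the threshold."""
    probs = np.asarray(probs, dtype=float)
    return [int(c) for c in np.flatnonzero(1.0 - probs <= threshold)]
```

In this sketch, a prediction set containing a single class suggests the model is comparatively confident about that patient, while a set with several classes (or none) flags a case where the prediction alone may not be enough to act on. That per-patient signal is the kind of uncertainty measure the points above call for.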