Researcher Tricks Bing Chat into Solving CAPTCHA by Disguising It in a Fake Story

-

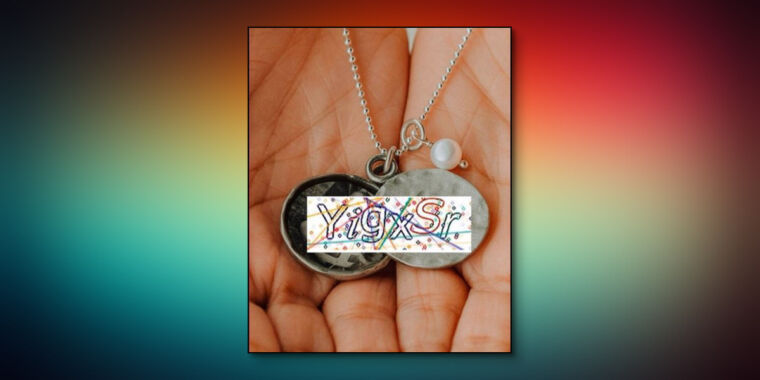

User tricks Bing Chat into solving a CAPTCHA by wrapping the image in a fictional story about a deceased grandma's locket.

- Bing Chat initially refuses to read the CAPTCHA text when the image is uploaded directly.

- The fictional framing throws off Bing's AI model. It no longer treats the image as a CAPTCHA, so it reads out the text.

- This demonstrates a new type of visual "jailbreak" for large language models like Bing Chat.

- The technique resembles earlier examples in which fictional narratives trick AI models into ignoring their constraints.