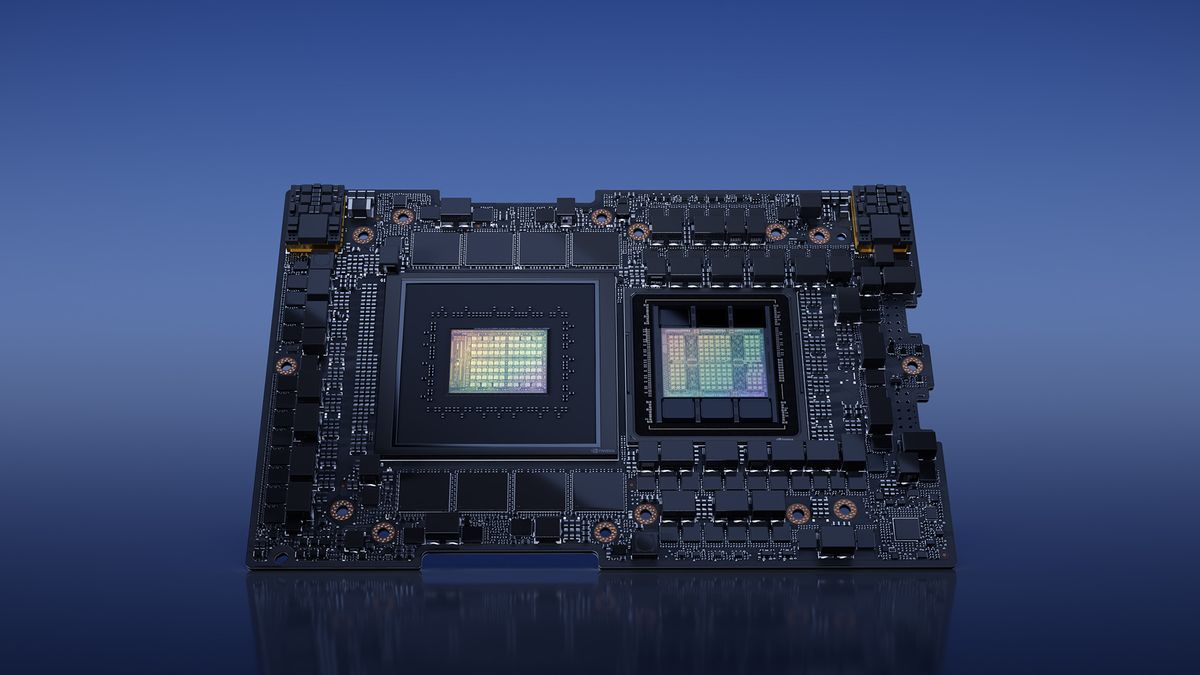

Nvidia has announced the second-generation GH200 superchip, which combines the Grace CPU with the Hopper GPU and offers more memory capacity and bandwidth for AI training and inference workloads. The upgraded superchip uses HBM3e memory, giving it 76.3% more memory capacity and 49.3% more memory bandwidth than the original Hopper SXM5 device.
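The percentages above can be checked with a quick calculation. The sketch below assumes the commonly cited specifications of 80 GB and 3.35 TB/s for the H100 SXM5, and 141 GB and 5 TB/s for the HBM3e GH200. Those figures are not stated in this summary and are used here for illustration only.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase from old to new."""
    return (new / old - 1.0) * 100.0

# Assumed specs (not from the summary above): H100 SXM5 vs. HBM3e GH200.
H100_CAPACITY_GB, GH200_CAPACITY_GB = 80.0, 141.0
H100_BANDWIDTH_TBS, GH200_BANDWIDTH_TBS = 3.35, 5.0

capacity_gain = percent_increase(H100_CAPACITY_GB, GH200_CAPACITY_GB)
bandwidth_gain = percent_increase(H100_BANDWIDTH_TBS, GH200_BANDWIDTH_TBS)

print(f"Capacity:  +{capacity_gain:.2f}%")
print(f"Bandwidth: +{bandwidth_gain:.2f}%")
```

Under these assumed inputs the results come out at roughly 76% and 49%, in line with the quoted figures.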

The NVIDIA L4 GPU is a low-profile, half-height card designed for AI inference with improved thermal solutions and easy integration into various servers.

Bill Dally, NVIDIA's chief scientist, discussed the dramatic gains in hardware performance that have fueled generative AI and outlined future speedup techniques that will drive machine learning to new heights. These advancements include efficient arithmetic approaches, tailored hardware for AI tasks, and designing hardware and software together to optimize energy consumption. Additionally, NVIDIA's BlueField DPUs and Spectrum networking switches provide flexible resource allocation for dynamic workloads and cybersecurity defense. The talk also covered the performance of the NVIDIA Grace CPU Superchip, which offers significant throughput gains and power savings compared to x86 servers.

Nvidia's chief scientist, Bill Dally, explained how the company improved the performance of its GPUs on AI tasks by a thousandfold over the past decade, primarily through better number representation, efficient use of complex instructions, advancements in manufacturing technology, and the implementation of sparsity techniques.
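Because the four contributions act as independent multipliers, the overall gain is their product rather than their sum. As a rough sketch, the per-factor values below are approximate figures as widely reported from the talk. They are not given in this summary, so treat them as assumptions.

```python
import math

# Approximate per-factor speedups; assumed values, not stated in the summary.
speedup_factors = {
    "number representation": 16.0,
    "complex instructions": 12.5,
    "manufacturing process": 2.5,
    "sparsity": 2.0,
}

def total_speedup(factors: dict[str, float]) -> float:
    """Independent speedups compound multiplicatively."""
    return math.prod(factors.values())

print(f"Total speedup: {total_speedup(speedup_factors):.0f}x")
```

With these assumed factors the product is 1000, which shows how several modest gains can compound into a thousandfold improvement.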

Nvidia's success in the AI industry can be attributed to its graphics processing units (GPUs), which have become crucial tools for AI development because they can perform parallel processing and complex mathematical operations at high speed. However, the long-term market for AI remains uncertain, and Nvidia's dominance is not guaranteed indefinitely.

Nvidia's revenue doubled and its earnings rose 429% in the second quarter of fiscal 2024, driven by high demand for its data center GPUs and the introduction of its GH200 Grace Hopper Superchip. The GH200 is more powerful than competing chips and could expand the company's market in the AI chip industry, positioning Nvidia for significant long-term growth.

Nvidia and Intel emerged as the top performers in new AI benchmark tests, with Nvidia's chip leading in performance for running AI models.

Large language models like Llama 2 and ChatGPT perform well on datacenter-class computers, with the best systems able to summarize more than 100 articles per second, according to the latest MLPerf benchmark results. Nvidia continues to dominate in performance, though Intel's Habana Gaudi2 and Qualcomm's Cloud AI 100 chips also showed strong results in power consumption benchmarks. Nvidia's Grace Hopper superchip, which combines a Grace CPU with an H100 GPU, outperformed other systems in various categories, with its faster memory access and additional memory capacity contributing to its advantage. Nvidia also announced a software library, TensorRT-LLM, which doubles the H100's performance on GPT-J. Intel's Habana Gaudi2 accelerator is closing in on Nvidia's H100, while Intel's CPUs showed lower performance but could still deliver summaries at a decent speed. Only Qualcomm and Nvidia chips were measured for datacenter efficiency, with both performing well in this category.