The main topic of the article is the development of AI language models, specifically ChatGPT, and the introduction of plugins that expand its capabilities. The key points are:

1. ChatGPT, an AI language model, has the ability to simulate ongoing conversations and make accurate predictions based on context.

2. The author discusses the concept of intelligence and how it relates to the ability to make predictions, as proposed by Jeff Hawkins.

3. The article highlights the limitations of AI language models, such as ChatGPT, in answering precise and specific questions.

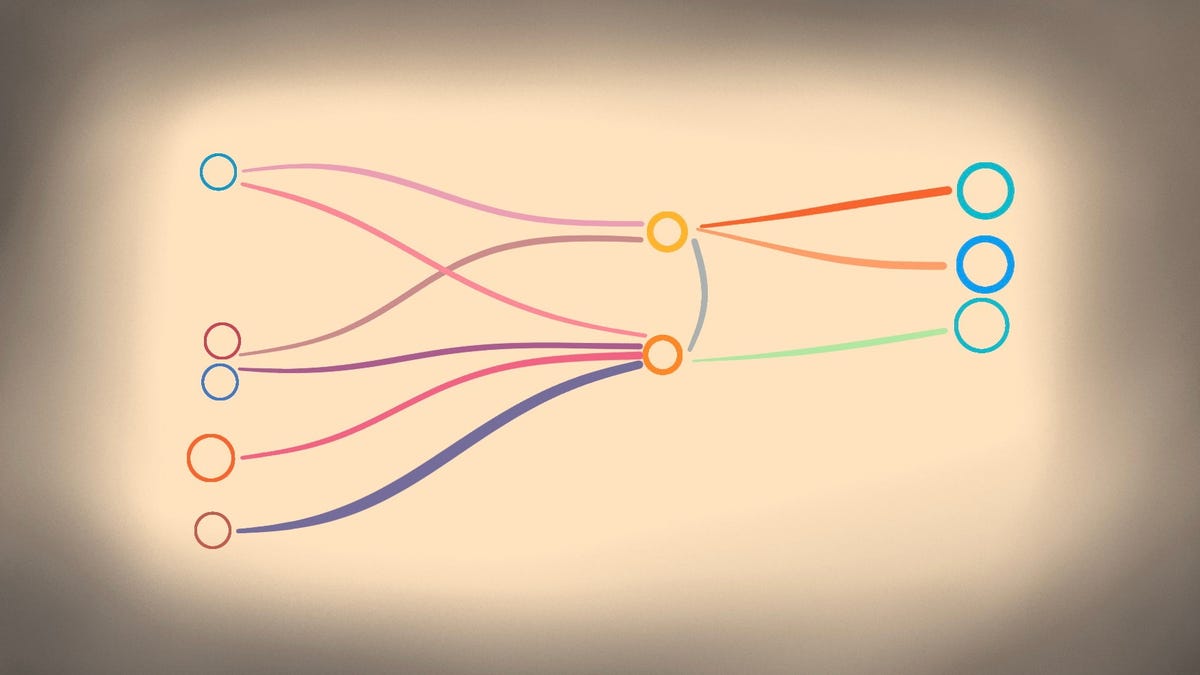

4. OpenAI has introduced a plugin architecture for ChatGPT, allowing it to access live data from the web and interact with specific websites, expanding its capabilities.

5. The integration of plugins, such as Wolfram|Alpha, enhances ChatGPT's ability to provide accurate and detailed information, bridging the gap between statistical and symbolic approaches to AI.

Overall, the article explores the potential and challenges of AI language models like ChatGPT and the role of plugins in expanding their capabilities.

The research team at Together AI has developed a new language model called Llama-2-7B-32K-Instruct, which excels at understanding and responding to complex and lengthy instructions, outperforming existing models in various tasks. This advancement has significant implications for applications that must understand intricate instructions in full and generate relevant responses to them, pushing the boundaries of natural language processing.

McKinsey has developed "Lilli," a generative AI platform that revolutionizes knowledge retrieval and utilization, reducing time and effort for consultants while generating novel insights and enhancing problem-solving capabilities.

A study found that a large language model (LLM) like ChatGPT can generate appropriate responses to patient-written ophthalmology questions, showing the potential of AI in the field.

Microsoft has introduced the "Algorithm of Thoughts," a prompting technique that enhances the reasoning abilities of language models like ChatGPT, making their problem-solving more efficient and human-like. The approach combines human intuition with algorithmic exploration to improve model performance and overcome limitations.

Perplexity.ai is building an alternative to traditional search engines by creating an "answer engine" that provides concise, accurate answers to user questions backed by curated sources, aiming to transform how we access knowledge online and challenge the dominance of search giants like Google and Bing.

AI tools from OpenAI, Microsoft, and Google are being integrated into productivity platforms like Microsoft Teams and Google Workspace, offering a wide range of AI-powered features for tasks such as text generation, image generation, and data analysis, although concerns remain regarding accuracy and cost-effectiveness.

Researchers from MIT and the MIT-IBM Watson AI Lab have developed a technique that uses computer-generated data to improve the concept understanding of vision and language models, resulting in a 10% increase in accuracy, which has potential applications in video captioning and image-based question-answering systems.

A team from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed a strategy that leverages multiple AI systems to discuss and argue with each other in order to converge on the best answer to a given question, improving the consistency and factual accuracy of language model outputs.
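
The debate strategy can be illustrated with a minimal sketch. This is not the CSAIL team's implementation: the `debate` loop, the `Agent` type, and the `make_stub_agent` helper are hypothetical names chosen for illustration, and the stub agents stand in for real language model calls. The sketch assumes each agent first answers independently, then sees its peers' answers for a few rounds and may revise, with the most common final answer taken as the result.

```python
from collections import Counter
from typing import Callable, List

# An agent maps (question, peers' latest answers) to its own answer.
# In a real system this would wrap a language model call (assumption).
Agent = Callable[[str, List[str]], str]


def debate(question: str, agents: List[Agent], rounds: int = 2) -> str:
    """Run a simple multi-agent debate and return the most common final answer."""
    # Initial round: every agent answers without seeing anyone else.
    answers = [agent(question, []) for agent in agents]
    for _ in range(rounds):
        # Each agent sees the other agents' previous answers and may revise.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    return Counter(answers).most_common(1)[0][0]


def make_stub_agent(initial: str) -> Agent:
    """Hypothetical stand-in for a model: adopts a strict majority of its peers."""
    def agent(question: str, peer_answers: List[str]) -> str:
        if not peer_answers:
            return initial
        top, count = Counter(peer_answers).most_common(1)[0]
        return top if count > len(peer_answers) / 2 else initial
    return agent


agents = [make_stub_agent("4"), make_stub_agent("4"), make_stub_agent("5")]
result = debate("What is 2 + 2?", agents)
print(result)
```

With these stubs, the dissenting agent switches to the majority answer after the first round. Real models would instead be prompted with the peers' full responses and asked to critique and update their own.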

DeepMind's Optimization by PROmpting (OPRO) method uses natural language prompts to improve the math abilities of AI language models, such as OpenAI's ChatGPT, achieving higher accuracy in problem-solving tasks.
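
A rough sketch of the kind of loop OPRO describes may help: an optimizer model is shown earlier instructions together with their scores and asked to propose better ones. The code below is an illustration under stated assumptions, not DeepMind's implementation. The names `opro`, `build_meta_prompt`, `make_stub_optimizer`, and `toy_score` are hypothetical, the optimizer is a stub that replays canned candidates instead of calling a model, and the scorer is a toy keyword check rather than accuracy measured on real math problems.

```python
from typing import Callable, List, Tuple

Scored = Tuple[str, float]


def build_meta_prompt(history: List[Scored], top_k: int = 3) -> str:
    """Describe the best instructions so far, in ascending score order."""
    best = sorted(history, key=lambda pair: pair[1])[-top_k:]
    lines = [f"text: {text}\nscore: {score:.2f}" for text, score in best]
    return (
        "Previous instructions and their scores:\n"
        + "\n".join(lines)
        + "\nWrite a new instruction that scores higher."
    )


def opro(
    seed: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    steps: int = 3,
) -> Scored:
    """Iteratively propose and score instructions; return the best pair found."""
    history: List[Scored] = [(seed, score(seed))]
    for _ in range(steps):
        meta_prompt = build_meta_prompt(history)
        for candidate in propose(meta_prompt):
            if candidate not in {text for text, _ in history}:
                history.append((candidate, score(candidate)))
    return max(history, key=lambda pair: pair[1])


def make_stub_optimizer(candidates: List[str]) -> Callable[[str], List[str]]:
    """Hypothetical optimizer: replays canned candidates, ignoring the prompt."""
    pool = iter(candidates)

    def propose(meta_prompt: str) -> List[str]:
        nxt = next(pool, None)
        return [] if nxt is None else [nxt]

    return propose


def toy_score(instruction: str) -> float:
    """Toy scorer (assumption): fraction of chosen keywords present."""
    keywords = ("step", "check")
    return sum(word in instruction.lower() for word in keywords) / len(keywords)


optimizer = make_stub_optimizer([
    "Solve the problem step by step.",
    "Solve step by step and check your answer.",
])
best_text, best_score = opro("Solve the problem.", optimizer, toy_score)
print(best_text, best_score)
```

In a real setup, `propose` would send the meta-prompt to a language model and `score` would run each candidate instruction on a set of held-out problems.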