### Summary

The article discusses the rapid advancement and potential risks of artificial intelligence (AI) and proposes the idea of nationalizing certain aspects of AI under a governing body called the Humane AI Commission to ensure AI is aligned with human interests.

### Facts

- AI is evolving rapidly and penetrating various aspects of American life, from image recognition to healthcare.

- AI has the potential to bring both significant benefits and risks to society.

- Transparency in AI is limited, and understanding how specific AI works is difficult.

- Congress is becoming more aware of the importance of AI and its need for regulation.

- The author proposes the creation of a governing body, the Humane AI Commission, that can control and steer AI technology to serve humanity's best interests.

- The nationalization of advanced AI models could be considered, similar to the Atomic Energy Commission's control over nuclear reactors.

- Various options, such as an AI pause or leaving AI development to the free market or current government agencies, have limitations in addressing the potential risks of AI.

- The author suggests that the United States should take a bold executive leadership approach to develop a national AI plan and ensure global AI leadership with a focus on benevolence and human-controlled AI.

### 🤖 AI Nationalization - The case to nationalize the “nuclear reactors” of AI — the world’s most advanced AI models — hinges on this question: Who do we want to control AI’s nuclear codes? Big Tech CEOs answering to a few billionaire shareholders, or the government of the United States, answering to its citizens?

### 👥 Humane AI Commission - The author proposes the creation of a Humane AI Commission, run by AI experts, to steer and control AI technology in alignment with human interests.

### ⚠️ Risks of AI - AI's rapid advancement and lack of transparency pose risks such as unpredictable behavior, potential damage to power generation, financial markets, and public health, and the potential for AI to move beyond human control.

### ⚖️ AI Regulation - The article calls for federal regulation of AI, but emphasizes the limitations of traditional regulation in addressing the fast-evolving nature of AI and the need for a larger-scale approach like nationalization.

### Summary

Artificial intelligence (AI) is a transformative technology that will reshape politics, economies, and societies, but it also poses significant challenges and risks. To effectively govern AI, policymakers should adopt a new governance framework that is precautionary, agile, inclusive, impermeable, and targeted. This framework should be built upon common principles and encompass three overlapping governance regimes: one for establishing facts and advising governments, one for preventing AI arms races, and one for managing disruptive forces. Additionally, global AI governance must move past traditional conceptions of sovereignty and invite technology companies to participate in rule-making processes.

### Facts

- **AI Progression**: AI systems have been evolving rapidly and possess the potential to self-improve and achieve quasi-autonomy. Models with trillions of parameters and brain-scale models could be viable within a few years.

- **Dual Use**: AI is dual-use, meaning it has both military and civilian applications. The boundaries between the two are blurred, and AI can be used to create and spread misinformation, conduct surveillance, and produce powerful weapons.

- **Accessibility and Proliferation Risks**: AI has become increasingly accessible and widespread, making regulatory efforts challenging. The ease of copying AI algorithms and models poses proliferation risks, as well as the potential for misuse and unintended consequences.

- **Shift in Global Power**: AI's advancement and geopolitical competition in AI supremacy are shifting the structure and balance of global power. Technology companies are becoming powerful actors in the digital realm, challenging the authority of nation-states.

- **Inadequate Governance**: Current regulatory efforts are insufficient to govern AI effectively. There is a need for a new governance framework that is agile, inclusive, and targeted to address the unique challenges posed by AI.

- **Principles for AI Governance**: Precaution, agility, inclusivity, impermeability, and targeting are key principles for AI governance. These principles should guide the development of granular regulatory frameworks.

- **Three Overlapping Governance Regimes**: Policy frameworks should include a regime for fact-finding and advising governments on AI risks; a regime for preventing AI arms races through international cooperation and monitoring; and a regime for managing disruptive forces and crises related to AI.

AI executives may be exaggerating the dangers of artificial intelligence in order to advance their own interests, according to an analysis of responses to proposed AI regulations.

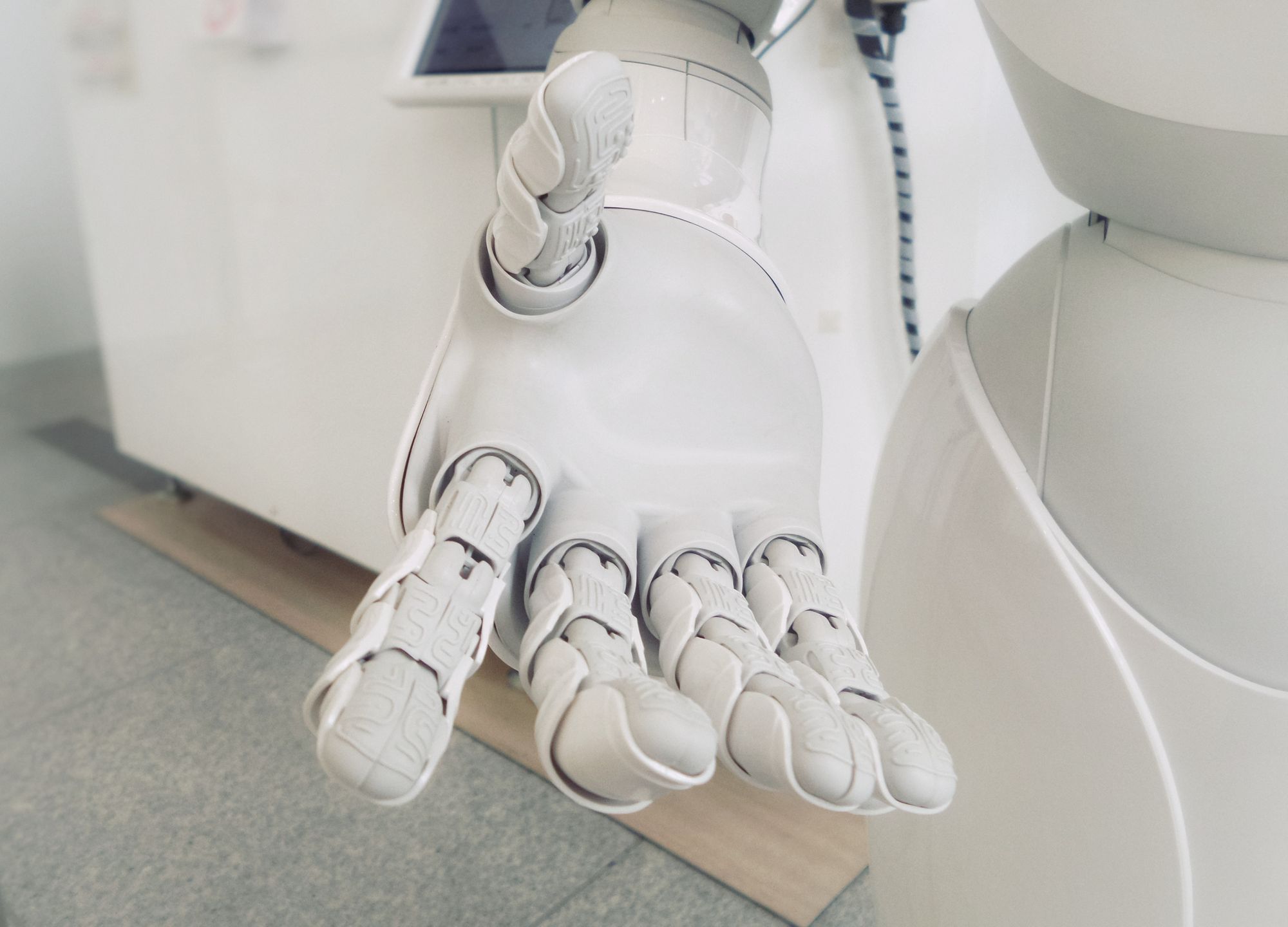

The potential impact of robotic artificial intelligence is a growing concern, as experts warn that the biggest risk comes from the manipulation of people through techniques such as neuromarketing and fake news, dividing society and eroding wisdom without the need for physical force.

The GZERO World podcast episode discusses the explosive growth and potential risks of generative AI, as well as the proposed 5 principles for effective AI governance.

Artificial intelligence should be controlled by humans to prevent its weaponization and ensure safety measures are in place, according to Microsoft's president Brad Smith. He stressed the need for regulations and laws to govern AI, comparing it to other technologies that have required safety brakes and human oversight. Additionally, Smith emphasized that AI is a tool to assist humans, not to replace them, and that it can help individuals think more efficiently.

Artificial intelligence expert Michael Wooldridge is not worried about the growth of AI itself, but is concerned about the potential for AI to become a controlling and invasive boss that monitors employees' every move. He argues that immediate, concrete existential concerns, such as the escalation of the conflict in Ukraine, are more important things to worry about.

The UK government has been urged to introduce new legislation to regulate artificial intelligence (AI) in order to keep up with the European Union (EU) and the United States, as the EU advances with the AI Act and US policymakers publish frameworks for AI regulations. The government's current regulatory approach risks lagging behind the fast pace of AI development, according to a report by the science, innovation, and technology committee. The report highlights 12 governance challenges, including bias in AI systems and the production of deepfake material, that need to be addressed in order to guide the upcoming global AI safety summit at Bletchley Park.

The authors propose a framework for assessing the potential harm caused by AI systems in order to address concerns about "Killer AI" and ensure responsible integration into society.

Artificial intelligence will play a significant role in the 2024 elections, making the production of disinformation easier but ultimately having less impact than anticipated, while paranoid nationalism corrupts global politics by scaremongering and abusing power.

Several tech giants in the US, including Alphabet, Microsoft, Meta Platforms, and Amazon, have pledged to collaborate with the Biden administration to address the risks associated with artificial intelligence, focusing on safety, security, and trust in AI development.

The author suggests that developing safety standards for artificial intelligence (AI) is crucial, drawing upon his experience in ensuring safety measures for nuclear weapon systems and highlighting the need for a manageable group to define these standards.

Britain has outlined its objectives for its global AI safety summit, with a focus on understanding the risks of AI and supporting national and international frameworks, bringing together tech executives, academics, and political leaders.

Former Google executive Mustafa Suleyman warns that artificial intelligence could be used to create more lethal pandemics by giving humans access to dangerous information and allowing for experimentation with synthetic pathogens. He calls for tighter regulation to prevent the misuse of AI.

Robots have been causing harm and even killing humans for decades, and as artificial intelligence advances, the potential for harm increases, highlighting the need for regulations to ensure safe innovation and protect society.

The lack of regulation surrounding artificial intelligence in healthcare is a significant threat, according to the World Health Organization's European regional director, who highlights the need for positive regulation to prevent harm while harnessing AI's potential.

The rivalry between the US and China over artificial intelligence (AI) is intensifying as both countries compete for dominance in the emerging field, but experts suggest that cooperation on certain issues is necessary to prevent conflicts and ensure global governance of AI. While tensions remain high and trust is lacking, potential areas of cooperation include AI safety and regulations. However, failure to cooperate could increase the risk of armed conflict and hinder the exploration and governance of AI.

Artificial intelligence (AI) poses both potential benefits and risks, as experts express concern about the development of nonhuman minds that may eventually replace humanity and the need to mitigate the risk of AI-induced extinction.

Artificial intelligence poses a more imminent threat to humanity's survival than the climate crisis, pandemics, or nuclear war, as discussed by philosopher Nick Bostrom and author David Runciman, who argue that challenges posed by AI can be negotiated by drawing on lessons learned from navigating state and corporate power throughout history.

Renowned historian Yuval Noah Harari warns that AI, as an "alien species," poses a significant risk to humanity's existence, as it has the potential to surpass humans in power and intelligence, leading to the end of human dominance and culture. Harari urges caution and calls for measures to regulate and control AI development and deployment.

Artificial Intelligence poses real threats due to its newness and rawness, such as ethical challenges, regulatory and legal challenges, bias and fairness issues, lack of transparency, privacy concerns, safety and security risks, energy consumption, data privacy and ownership, job loss or displacement, explainability problems, and managing hype and expectations.

Tech heavyweights, including Elon Musk, Mark Zuckerberg, and Sundar Pichai, expressed overwhelming consensus in favor of regulating artificial intelligence during a closed-door meeting with US lawmakers convened to discuss the potential risks and benefits of AI technology.

The UK government is showing increased concern about the potential risks of artificial intelligence (AI) and the influence of the "Effective Altruism" (EA) movement, which warns of the existential dangers of super-intelligent AI and advocates for long-term policy planning; critics argue that the focus on future risks distracts from the real ethical challenges of AI in the present and raises concerns of regulatory capture by vested interests.

Artificial intelligence poses an existential threat to humanity if left unregulated and on its current path, according to technology ethicist Tristan Harris.

The United Nations is urging the international community to confront the potential risks and benefits of Artificial Intelligence, which has the power to transform the world.

Artificial intelligence (AI) has become the new focus of concern for tech-ethicists, surpassing social media and smartphones, with exaggerated claims of AI's potential to cause the extinction of the human race. These fear-mongering tactics and populist misinformation have garnered attention and book deals for some, but are lacking in nuance and overlook the potential benefits of AI.

President Biden has called for the governance of artificial intelligence to ensure it is used as a tool of opportunity and not as a weapon of oppression, emphasizing the need for international collaboration and regulation in this area.

A new poll reveals that 63% of American voters believe regulation should actively prevent the development of superintelligent AI, challenging the assumption that artificial general intelligence (AGI) should exist. The public is increasingly questioning the potential risks and costs associated with AGI, highlighting the need for democratic input and oversight in the development of transformative technologies.

Artificial intelligence (AI) is advancing rapidly, but current AI systems still have limitations and do not pose an immediate threat of taking over the world, although there are real concerns about issues like disinformation and defamation, according to Stuart Russell, a professor of computer science at UC Berkeley. He argues that the alignment problem, or the challenge of programming AI systems with the right goals, is a critical issue that needs to be addressed, and that regulation is necessary to mitigate the potential harms of AI technology, such as the creation and distribution of deepfakes and misinformation. The development of artificial general intelligence (AGI), which surpasses human capabilities, would be the most consequential event in human history and could either transform civilization or lead to its downfall.

New developments in Artificial Intelligence (AI) have the potential to revolutionize our lives and help us achieve the Sustainable Development Goals (SDGs), but it is important to engage in discourse about the risks and create safeguards to ensure a safe and prosperous future for all.

The United States must prioritize global leadership in artificial intelligence (AI) and win the platform competition with China in order to protect national security, democracy, and economic prosperity, according to Ylli Bajraktari, the president and CEO of the Special Competitive Studies Project and former Pentagon official.

Artificial intelligence will be a significant disruptor in various aspects of our lives, bringing both positive and negative effects, including increased productivity, job disruptions, and the need for upskilling, according to billionaire investor Ray Dalio.

The creation of artificial intelligence has initiated an uncontrollable and poorly understood evolutionary process, posing potential dangers that should not be underestimated.

The U.S. government must establish regulations and enforce standards to ensure the safety and security of artificial intelligence (AI) development, including requiring developers to demonstrate the safety of their systems before deployment, according to Anthony Aguirre, the executive director and secretary of the board at the Future of Life Institute.

Deputy Prime Minister Oliver Dowden will warn the UN that artificial intelligence (AI) poses a threat to world order unless governments take action, with fears that the rapid pace of AI development could lead to job losses, misinformation, and discrimination without proper regulations in place. Dowden will call for global regulation and emphasize the importance of making rules in parallel with AI development rather than retroactively. Despite the need for regulation, experts note the complexity of reaching a quick international agreement, with meaningful input needed from smaller countries, marginalized communities, and ethnic minorities. The UK aims to take the lead in AI regulation, but there are concerns that without swift action, the European Union's AI Act could become the global standard instead.

Israeli Prime Minister Benjamin Netanyahu warned of the potential dangers of artificial intelligence (AI) and called for responsible and ethical development of AI during his speech at the United Nations General Assembly, emphasizing that nations must work together to prevent the perils of AI and ensure it brings more freedom and benefits humanity.

The UK Deputy Prime Minister has announced an AI Safety Summit to address the risks and opportunities of frontier AI, emphasizing the need for understanding and governing artificial intelligence at great speed.

The true potential of AI can only be realized when organizations prioritize judgment alongside technological advancements, as judgment will be the real competitive advantage in the age of AI.

Experts in artificial intelligence believe the development of artificial general intelligence (AGI), which refers to AI systems that can perform tasks at or above human level, is approaching rapidly, raising concerns about its potential risks and the need for safety regulations. However, there are also contrasting views, with some suggesting that the focus on AGI is exaggerated as a means to regulate and consolidate the market. The threat of AGI includes concerns about its uncontrollability, potential for autonomous improvement, and its ability to refuse to be switched off or combine with other AIs. Additionally, there are worries about the manipulation of AI models below AGI level by rogue actors for nefarious purposes such as bioweapons.

The concerns of the general public regarding artificial intelligence (AI) differ from those of elites, with job loss and national security being their top concerns rather than killer robots and biased algorithms.

Artificial intelligence (AI) can be a positive force for democracy, particularly in combatting hate speech, but public trust should be reserved until the technology is better understood and regulated, according to Nick Clegg, President of Global Affairs for Meta.

Artificial intelligence (AI) has become an undeniable force in our lives, with wide-ranging implications and ethical considerations, posing both benefits and potential harms, and raising questions about regulation and the future of humanity's relationship with AI.

An organization dedicated to the safe development of artificial intelligence has released a breakthrough paper on understanding and controlling AI systems to mitigate risks such as deception and bias.

Separate negotiations on artificial intelligence in Brussels and Washington highlight the tension between prioritizing short-term risks and long-term problems in AI governance.