Minnesota Secretary of State Steve Simon is concerned about the potential impact of AI-generated deepfakes on elections, since they can spread false information and distort reality, creating a need for new laws and enforcement measures.

The proliferation of deepfake videos and audio, fueled by the AI arms race, is impacting businesses by increasing the risk of fraud, cyberattacks, and reputational damage, according to a report by KPMG. Scammers are using deepfakes to deceive people, manipulate company representatives, and swindle money from firms, highlighting the need for vigilance and cybersecurity measures in the face of this threat.

Hollywood actors are on strike over concerns that AI technology could be used to digitally replicate their likenesses without fair compensation. British actor Stephen Fry, among other famous actors, warns of the potential harm of AI in the film industry, specifically the use of deepfake technology.

Deepfakes, which are fake videos or images created by AI, pose a real risk to financial markets, which they can be used to manipulate, and to businesses, which they can target with scams. Their most significant negative impact, however, lies in deepfake pornography, particularly non-consensual explicit content, which causes emotional and physical harm to victims and raises concerns about privacy, consent, and exploitation.

AI-generated deepfakes pose serious challenges for policymakers, as they can be used to spread political propaganda, incite violence, create conflicts, and undermine democracy, highlighting the need for regulation and control over AI technology.

Deepfake images and videos created by AI are becoming increasingly prevalent and pose significant threats to society, democracy, and scientific research, because they can spread misinformation and be used for malicious purposes. Researchers are developing tools to detect and tag synthetic content, but education, regulation, and responsible behavior by technology companies are also needed to address this growing issue.

Artificial intelligence (AI) has the potential to facilitate deceptive practices such as deepfake videos and misleading ads, posing a threat to American democracy, according to experts who testified before the U.S. Senate Rules Committee.

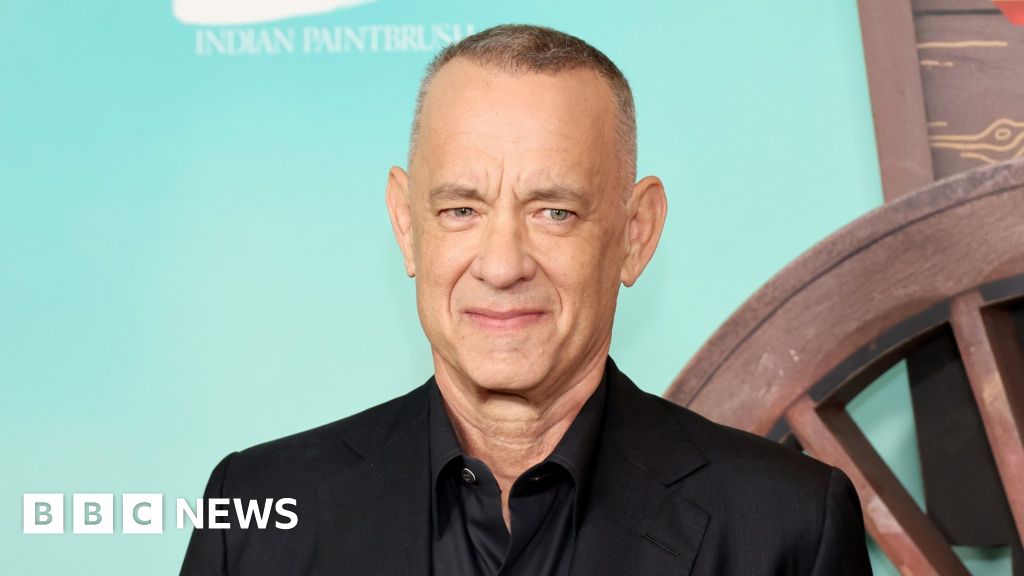

Actor Tom Hanks warned on Instagram about an AI-generated version of himself being used without his consent to promote a dental plan, highlighting the concerns surrounding AI and the potential for it to "replace" actors or digitally recreate their likeness.

Tom Hanks denounces an ad featuring an AI version of him selling dental insurance, highlighting the unethical use of AI-generated content.

Tom Hanks warns about the spread of fake information and deepfake technology, highlighting the legal and artistic challenges posed by AI-generated content featuring an actor's likeness and voice.

Tom Hanks and Gayle King have warned their social media followers about fraudulent advertisements that use artificial intelligence versions of themselves without their consent.

AI technology is advancing in fields such as real estate analysis, fighter pilot helmets, and surveillance tools, while Tom Hanks warns fans about a scam using his name.

A deepfake MrBeast ad slipped past TikTok's ad moderation technology, highlighting the challenge social media platforms face in handling the rise of AI deepfakes.

Deepfake videos featuring celebrities like Gayle King, Tom Hanks, and Elon Musk have prompted concerns about the misuse of AI technology, leading to calls for legislation and for ethical consideration of how such videos are created and disseminated. Celebrities have denounced these AI-generated videos as inauthentic and misleading, emphasizing the need for legal protection and labeling of such content.

Tom Hanks expresses his displeasure after an AI-generated twin of himself is used to promote a dental plan without his permission, highlighting the growing concern of unauthorized use of celebrities' likeness and the blurry lines between reality and digital fabrication.

American venture capitalist Tim Draper warns that scammers are using AI to create deepfake videos and voices in order to scam crypto users.

Artificial intelligence (AI) is increasingly being used to create fake audio and video content for political ads, raising concerns about the potential for misinformation and manipulation in elections. While some states have enacted laws against deepfake content, federal regulations are limited, and there are debates about the balance between regulation and free speech rights. Experts advise viewers to be skeptical of AI-generated content and look for inconsistencies in audio and visual cues to identify fakes. Larger ad firms are generally cautious about engaging in such practices, but anonymous individuals can easily create and disseminate deceptive content.