The main topic is the potential impact of AI on video editing and its implications for the future.

Key points include:

- The fear of AI being used to manipulate videos and create fake content during elections.

- Advances in AI-powered editing apps such as Photoleap (photo editing) and Videoleap (video editing).

- The interview with Zeev Farbman, co-founder and CEO of Lightricks, who discusses the current state and future potential of AI in video editing.

- The comparison of AI to a tool like dynamite, highlighting the lack of regulation surrounding AI.

- The assertion that AI video editing is a continuation of what has already been done with photo AI.

- The claim that the world of image creation is almost a solved problem, but user interfaces and controls still need improvement.

- The mention of current consumer AI videos that lack consistency and realism.

- The anticipation of rapid changes in AI video editing technology.

Main Topic: Increasing use of AI in manipulative information campaigns online.

Key Points:

1. Mandiant has observed AI-generated content in politically motivated online influence campaigns since 2019.

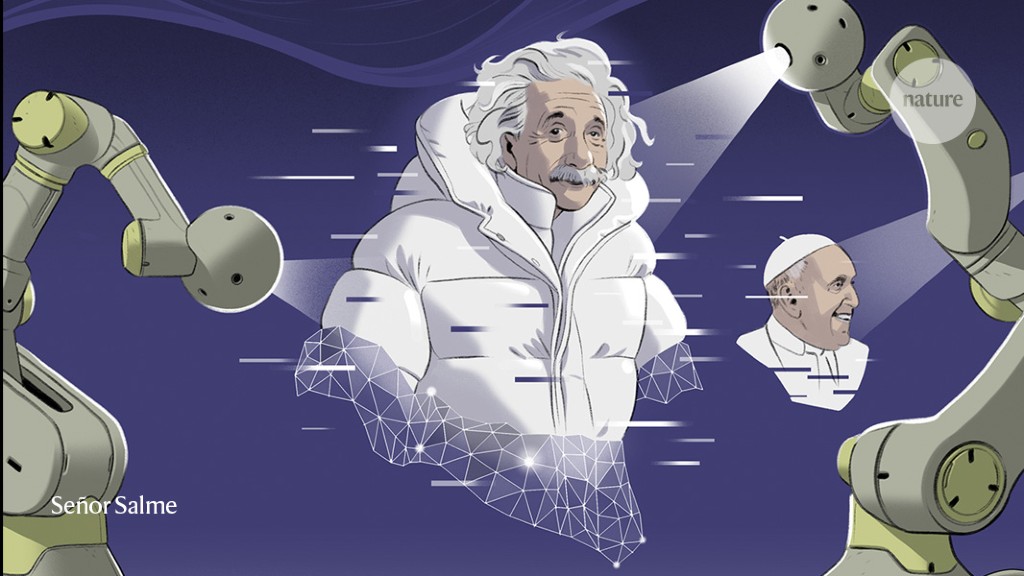

2. Generative AI models make it easier to create convincing fake videos, images, text, and code, increasing the threat posed by such campaigns.

3. While the impact of these campaigns has been limited so far, AI's role in digital intrusions is expected to grow in the future.

### Summary

The rise of generative artificial intelligence (AI) is making it difficult for the public to tell real content from fake, raising concerns about deceptive political content in the upcoming 2024 U.S. presidential race. However, the Content Authenticity Initiative is working on a digital standard to restore trust in online content.

### Facts

- Generative AI is capable of producing hyper-realistic fake content, including text, images, audio, and video.

- AI tools have already been used to create deceptive political content, such as AI-generated images of President Joe Biden in a Republican Party ad and a fabricated version of former President Donald Trump's voice in an ad from a group supporting Florida Gov. Ron DeSantis' White House bid.

- The Content Authenticity Initiative, a coalition of companies, is developing a digital standard to restore trust in online content.

- Truepic, a company involved in the initiative, uses camera technology to add verified content provenance information to images, helping to verify their authenticity.

- The initiative aims to display "content credentials" that provide information about the history of a piece of content, including how it was captured and edited.

- The initiative hopes creators will widely adopt the standard so that authentic content can be distinguished from manipulated content.

- Adobe is having conversations with social media platforms to implement the new content credentials, but no platforms have joined the initiative yet.

- Experts are concerned that generative AI could further erode trust in information ecosystems and potentially impact democratic processes, highlighting the importance of industry-wide change.

- Regulators and lawmakers are discussing how to address the challenges posed by AI-generated fake content.

Google DeepMind has commissioned 13 artists to create diverse and accessible art and imagery that aims to change the public’s perception of AI, countering the unrealistic and misleading stereotypes often used to represent the technology. The artwork visualizes key themes related to AI, such as artificial general intelligence, chip design, digital biology, large image models, language models, and the synergy between neuroscience and AI, and it is openly available for download.

The proliferation of deepfake videos and audio, fueled by the AI arms race, is impacting businesses by increasing the risk of fraud, cyberattacks, and reputational damage, according to a report by KPMG. Scammers are using deepfakes to deceive people, manipulate company representatives, and swindle money from firms, highlighting the need for vigilance and cybersecurity measures in the face of this threat.

AI Algorithms Battle Russian Disinformation Campaigns on Social Media

An individual known as Nea Paw has developed an AI-powered project called CounterCloud to combat mass-produced AI disinformation. In response to tweets from Russian media outlets and the Chinese embassy that criticized the US, CounterCloud produced tweets, articles, and even fake journalists and news sites, all generated entirely by AI algorithms. Paw believes the project highlights the danger of easily accessible generative AI tools being used for state-backed propaganda. Some argue that educating users about manipulative AI-generated content, or equipping browsers with AI-detection tools, could mitigate the issue, but Paw considers these solutions neither effective nor elegant.

Disinformation researchers have long warned that AI language models could be used for personalized propaganda campaigns and to influence social media users. Evidence of AI-powered disinformation campaigns has already emerged: academic researchers uncovered a botnet powered by ChatGPT. Legitimate political organizations, such as the Republican National Committee, have also used AI-generated content, including fake images. AI-generated text can still be fairly generic, but with some human polish it becomes highly effective and difficult for automated filters to detect. OpenAI has expressed concern about its technology being used to create tailored automated disinformation at scale; although it has updated its policies to restrict political use, effectively blocking the generation of such material remains a challenge. As AI tools become more accessible, society must recognize their presence in politics and guard against their misuse.

Google is trialling a digital watermark called SynthID to identify images made by artificial intelligence (AI) in order to combat disinformation and copyright infringement, as the line between real and AI-generated images becomes blurred.

Fake videos of celebrities promoting phony services, created using deepfake technology, have emerged on major social media platforms like Facebook, TikTok, and YouTube, sparking concerns about scams and the manipulation of online content.

AI-generated videos aimed at children online are raising safety concerns, alongside worries that AI will cause job losses and act as an oppressive boss. On the other hand, AI has the potential to protect critical infrastructure and extend human life.

AI technology is making it easier and cheaper to produce mass-scale propaganda campaigns and disinformation, using generative AI tools to create convincing articles, tweets, and even journalist profiles, raising concerns about the spread of AI-powered fake content and the need for mitigation strategies.

Google has updated its political advertising policy to require election advertisers to disclose the use of synthetic or AI-generated images or videos in their ads, aiming to prevent the spread of deepfakes and deceptive content.

AI on social media platforms is seen as a potential threat to voter sentiment in the upcoming US presidential election, with China-affiliated actors using AI-generated visual media to amplify politically divisive topics. At the same time, companies such as Accrete AI are using AI to detect and predict disinformation threats in real time.

Deepfake audio technology, which can generate realistic but false recordings, poses a significant threat to democratic processes by enabling underhanded political tactics and the spread of disinformation, with experts warning that it will be difficult to distinguish between real and fake recordings and that the impact on partisan voters may be minimal. While efforts are being made to develop proactive standards and detection methods to mitigate the damage caused by deepfakes, the industry and governments face challenges in regulating their use effectively, and the widespread dissemination of disinformation remains a concern.

With the rise of AI-generated deepfakes, there is a clear and present danger that manipulated videos and photos will be used to deceive voters in the upcoming elections, making it crucial to combat this disinformation for the sake of election integrity and national security.

The Internet Watch Foundation (IWF) has observed a surge in AI-generated child sexual abuse material (CSAM) circulating online, raising concerns about the ability to identify and protect real children in need. Law enforcement and policymakers are working to address the growing problem of deepfake content created with generative AI platforms, including by introducing legislation in the US to prevent the use of deceptive AI in elections.

The generative AI boom has led to a "shadow war for data," as AI companies scrape information from the internet without permission, sparking a backlash among content creators and raising concerns about copyright and licensing in the AI world.

Hollywood actors are on strike over concerns that AI technology could be used to digitally replicate their image without fair compensation. British actor Stephen Fry, among other famous actors, warns of the potential harm of AI in the film industry, specifically the use of deepfake technology.

Adversaries and criminal groups are exploiting artificial intelligence (AI) to carry out malicious activities, according to FBI Director Christopher Wray. He warned that while AI can automate tasks for law-abiding citizens, it also enables the creation of deepfakes and malicious code, posing a threat to US citizens. The FBI is working to identify and track those misusing AI but is cautious about using it itself. Other US security agencies, however, already use AI to counter various threats, and concerns about China's use of AI for misinformation and propaganda are growing.

AI-generated deepfakes pose serious challenges for policymakers, as they can be used for political propaganda, incite violence, create conflicts, and undermine democracy, highlighting the need for regulation and control over AI technology.

As AI technology progresses, creators worry that their work will be misused and exploited, eroding trust and polluting the digital public space with untrustworthy content.

Criminals are increasingly using artificial intelligence, including deepfakes and voice cloning, to carry out scams and deceive people online, posing a significant threat to online security.

AI-generated images have the potential to create alternative history and misinformation, raising concerns about their impact on elections and people's ability to discern truth from manipulated visuals.

Artificial intelligence (AI) has the potential to facilitate deceptive practices such as deepfake videos and misleading ads, posing a threat to American democracy, according to experts who testified before the U.S. Senate Rules Committee.

Deep Render, a startup specializing in AI video compression, aims to reduce video file sizes by up to 50 times, addressing the challenge of transferring data-heavy, high-quality video over the internet. The company's AI-driven compression pipeline traces every pixel across the frame sequence, offering improved image and audio quality while easing bandwidth constraints.

The reliability of digital watermarking techniques used by tech giants like Google and OpenAI to identify and distinguish AI-generated content from human-made content has been questioned by researchers at the University of Maryland. Their findings suggest that watermarking may not be an effective defense against deepfakes and misinformation.

AI-altered images of celebrities are being used to promote products without their consent, raising concerns about the misuse of artificial intelligence and the need for regulations to protect individuals from unauthorized AI-generated content.

The use of AI, including deepfakes, by political leaders around the world is on the rise, with at least 16 countries deploying deepfakes for political gain, according to a report from Freedom House, leading to concerns over the spread of disinformation, censorship, and the undermining of public trust in the democratic process.

Microsoft has integrated OpenAI's DALL-E 3 model into its Bing Image Creator and Chat services, adding an invisible watermark to AI-generated images, as experts warn of the risks of generative AI tools being used for disinformation; however, some researchers question the effectiveness of watermarking in combating deepfakes and misinformation.

Artificial Intelligence is being misused by cybercriminals to create scam emails, text messages, and malicious code, making cybercrime more scalable and profitable. However, the current level of AI technology is not yet advanced enough to be widely used for deepfake scams, although there is a potential future threat. In the meantime, individuals should remain skeptical of suspicious messages and avoid rushing to provide personal information or send money. AI can also be used by the "good guys" to develop software that detects and blocks potential fraud.

AI-generated stickers are causing controversy as users create obscene and offensive images; Microsoft Bing's image generation feature has produced pictures of celebrities and video game characters committing the 9/11 attacks; a person has been injured by a Cruise robotaxi; and a new report reveals how autocratic governments are weaponizing AI. Separately, artists are increasingly worried about surviving in a market where AI replaces them, and an interview highlights how AI is aiding government censorship and fueling disinformation campaigns.

U.K. startup Yepic AI, which claims to use "deepfakes for good," violated its own ethics policy by creating and sharing deepfaked videos of a TechCrunch reporter without the reporter's consent. The company has since said it will update its ethics policy.

The AI industry's environmental impact may be worse than previously thought, as a new study suggests that its energy needs could soon match those of a small country, prompting questions about the justification for generative AI technologies like ChatGPT and their contribution to climate change. Meanwhile, the music industry is pushing for legal protections against the unauthorized use of AI deepfakes replicating artists' visual or audio likenesses.

Some AI programs are incorrectly labeling real photographs from the war in Israel and Palestine as fake, highlighting the limitations and inaccuracies of current AI image detection tools.

Deepfake AI technology poses a new threat in the Israel-Gaza conflict, as it enables manipulated videos that can spread misinformation and alter public perception. This has prompted media outlets such as CBS to develop capabilities for handling deepfakes, but many still underestimate the extent of the threat. Israeli startup Clarity, which develops an AI "Collective Intelligence Engine," is working to tackle the deepfake challenge and protect against manipulation of public opinion.

American venture capitalist Tim Draper warns that scammers are using AI to create deepfake videos and voices in order to scam crypto users.

A recent analysis found that pornography makes up 98% of all deepfake videos online, and that the number of deepfake videos has risen 550% this year compared with 2019.

Artificial intelligence (AI) is increasingly being used to create fake audio and video content for political ads, raising concerns about the potential for misinformation and manipulation in elections. While some states have enacted laws against deepfake content, federal regulations are limited, and there are debates about the balance between regulation and free speech rights. Experts advise viewers to be skeptical of AI-generated content and look for inconsistencies in audio and visual cues to identify fakes. Larger ad firms are generally cautious about engaging in such practices, but anonymous individuals can easily create and disseminate deceptive content.

Deepfake visuals created by artificial intelligence (AI) are expected to complicate the Israeli-Palestinian conflict, as Hamas and other factions have been known to manipulate images and generate fake news to control the narrative in the Gaza Strip. While AI-generated deepfakes can be difficult to detect, there are still tell-tale signs that set them apart from real images.

A new report from the Internet Watch Foundation reveals that offenders are utilizing AI-generated images to create and distribute child sexual abuse material, with nearly 3,000 AI-generated images found to be illegal under UK law.

The Internet Watch Foundation has warned that AI-generated child sexual abuse images are becoming a reality and could overwhelm the internet: nearly 3,000 AI-made abuse images break UK law, and existing images of real-life abuse victims are being built into AI models to produce new depictions of them. AI technology is also being used to de-age celebrities and depict them as children in sexual abuse scenarios, and to "nudify" pictures of clothed children found online. The IWF fears that this influx of AI-generated CSAM will divert attention from detecting real abuse and helping victims.

Artificial intelligence and deepfakes pose a significant challenge to fighting misinformation in wartime, as the Russo-Ukrainian War demonstrated: AI-generated videos created confusion and distrust among the public and news media, even when they were eventually debunked. Because public trust in all media coming out of conflicts may be eroded, the media and the general public need better deepfake literacy to discern real from fake content.

Free and cheap AI tools are enabling the creation of fake AI celebrities and content, leading to an increase in fraud and false endorsements, making it important for consumers to be cautious and vigilant when evaluating products and services.

The Internet Watch Foundation has warned that the proliferation of deepfake photos generated by artificial intelligence tools could exacerbate the issue of child sexual abuse images online, overwhelming law enforcement investigators and increasing the number of potential victims. The watchdog agency urges governments and technology providers to take immediate action to address the problem.

Artificial intelligence is being legally used to create images of child sexual abuse, sparking concerns over the exploitation of children and the need for stricter regulations.

The Israel-Hamas conflict is being exacerbated by artificial intelligence (AI), which is being used to generate a flood of misinformation and propaganda on social media, making it difficult for users to discern what is real and what is fake. AI-generated images and videos are being used to spread inflammatory propaganda, deceive the public, and target specific groups. According to experts, the rise of unregulated AI tools amounts to an "experiment on ourselves," and there is a lack of effective tools to quickly identify and counter AI-generated content. Social media platforms are struggling to keep up, leading to the widespread dissemination of false information.