- The AI Agenda is a new newsletter from The Information that focuses on the fast-paced world of artificial intelligence.

- The newsletter aims to provide daily insights on how AI is transforming various industries and the challenges it poses for regulators and content publishers.

- It will feature analysis from top researchers, founders, and executives, as well as provide scoops on deals and funding of key AI startups.

- The newsletter will cover advancements in AI technology such as ChatGPT and AI-generated video, and explore their impact on society.

- The goal is to provide readers with a clear understanding of the latest developments in AI and what to expect in the future.

- Social media creators are exploring the use of generative artificial intelligence (AI) to enhance their personal brands and streamline their work.

- Giselle Ugarte, a popular TikTok creator with nearly 300,000 followers, is testing AI technology to assist with onboarding new clients.

- Ugarte collaborated with Vermont startup Render Media to create a digital likeness of herself for her business.

- She spent a few hours at Render's New York studio, posing for a camera and reading scripts in different moods to capture her likeness.

- Using AI technology in this way could save creators time and effort in managing their online presence and engaging with clients.

The main topic is the emergence of AI in 2022, particularly in the areas of image and text generation. The key points are:

1. AI models like DALL-E, MidJourney, and Stable Diffusion have revolutionized image generation.

2. ChatGPT has made significant breakthroughs in text generation.

3. The history of previous tech epochs shows that disruptive innovations often come from new entrants in the market.

4. Existing companies like Apple, Amazon, Meta (formerly Facebook), Google, and Microsoft are well-positioned to capitalize on the AI epoch.

5. Each company has its own approach to AI, with Apple focusing on local deployment, Amazon on cloud services, Meta on personalized content, Google on search, and Microsoft on productivity apps.

The main topic of the article is Kickstarter's struggle to formulate a policy regarding the use of generative AI on its platform. The key points are:

1. Generative AI tools used on Kickstarter have been trained on publicly available content without giving credit or compensation to the original creators.

2. Kickstarter is requiring projects using AI tools to disclose relevant details about how the AI content will be used and which parts are original.

3. New projects involving the development of AI tech must detail the sources of training data and implement safeguards for content creators.

4. Kickstarter's new policy will go into effect on August 29 and will be enforced through a new set of questions during project submissions.

5. Projects that do not properly disclose their use of AI may be suspended.

6. Kickstarter has been considering changes in policy around generative AI since December and has faced challenges in moderating AI works.

Google does not consider AI labeling (disclosing that content was generated using artificial intelligence) necessary for ranking purposes; the search engine weighs content quality, user experience, and the authority of the website and author more heavily than the origin of the content. Human editors nonetheless remain crucial for verifying facts and adding a human touch to AI-generated content, and policies and frameworks around AI use may evolve as the technology becomes more widespread.

Generative AI is enabling the creation of fake books that mimic the writing style of established authors, raising concerns regarding copyright infringement and right of publicity issues, and prompting calls for compensation and consent from authors whose works are used to train AI tools.

The use of copyrighted material to train generative AI tools is leading to a clash between content creators and AI companies, with lawsuits being filed over alleged copyright infringement and violations of fair use. The outcome of these legal battles could have significant implications for innovation and society as a whole.

The Associated Press has released guidance on the use of AI in journalism, stating that while it will continue to experiment with the technology, it will not use it to create publishable content and images, raising questions about the trustworthiness of AI-generated news. Other news organizations have taken different approaches, with some openly embracing AI and even advertising for AI-assisted reporters, while smaller newsrooms with limited resources see AI as an opportunity to produce more local stories.

Cybercriminals are misusing generative AI tools, according to a report from Check Point Research, driving an 8% spike in global cyberattacks in the second quarter of the year and making attackers more productive.

AI is being used by cybercriminals to create more powerful and authentic-looking emails, making phishing attacks more dangerous and harder to detect.

SEO professionals in 2023 and 2024 are most focused on content creation and strategy. Generative AI is a disruptive tool that can automate content development and production, although it has limitations, and standing out from competitors will be a challenge. AI can be used effectively for repurposing existing content, automated keyword research, content analysis, content optimization, and personalization and segmentation, but marketers should lead with authenticity, highlight their expertise, and keep experimenting to stay ahead of the competition.

Researchers at Virginia Tech have used AI and natural language processing to analyze 10 years of broadcasts and tweets from CNN and Fox News, revealing a surge in partisan and inflammatory language that influences public debates on social media and reinforces existing views, potentially driving a wedge in public discourse.

Generative AI has the potential to significantly boost productivity and add trillions of dollars to the global economy, but adoption is still in its early stages, and widespread use at many companies remains years away because of concerns about data security, accuracy, and economic implications.

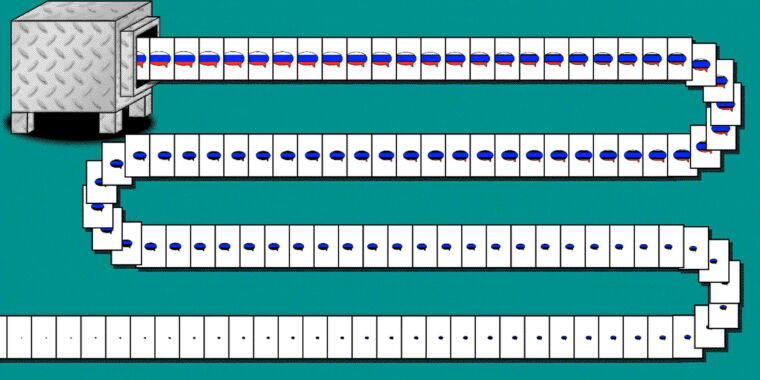

AI Algorithms Battle Russian Disinformation Campaigns on Social Media

A mysterious individual known as Nea Paw has developed an AI-powered project called CounterCloud to combat mass-produced AI disinformation. In response to tweets from Russian media outlets and the Chinese embassy that criticized the US, CounterCloud produced tweets, articles, and even journalists and news sites that were entirely generated by AI algorithms. Paw believes that the project highlights the danger of easily accessible generative AI tools being used for state-backed propaganda. While some argue that educating users about manipulative AI-generated content or equipping browsers with AI-detection tools could mitigate the issue, Paw believes that these solutions are not effective or elegant. Disinformation researchers have long warned about the potential of AI language models being used for personalized propaganda campaigns and influencing social media users. Evidence of AI-powered disinformation campaigns has already emerged, with academic researchers uncovering a botnet powered by AI language model ChatGPT. Legitimate political campaigns, such as the Republican National Committee, have also utilized AI-generated content, including fake images. AI-generated text can still be fairly generic, but with human finesse, it becomes highly effective and difficult to detect using automated filters. OpenAI has expressed concern about its technology being utilized to create tailored automated disinformation at a large scale, and while it has updated its policies to restrict political usage, it remains a challenge to block the generation of such material effectively. As AI tools become increasingly accessible, society must become aware of their presence in politics and protect against their misuse.

Deceptive generative AI-based political ads are becoming a growing concern, making it easier to sell lies and increasing the need for news organizations to understand and report on these ads.

Generative AI tools are revolutionizing the creator economy by speeding up work, automating routine tasks, enabling efficient research, facilitating language translation, and teaching creators new skills.

Generative AI is being used to create misinformation that is increasingly difficult to distinguish from reality, posing significant threats such as manipulating public opinion, disrupting democratic processes, and eroding trust, with experts advising skepticism, attention to detail, and not sharing potentially AI-generated content to combat this issue.

Google's AI-generated search result summaries, which use key points from news articles, are facing criticism for potentially incentivizing media organizations to put their work behind paywalls and leading to accusations of theft. Media companies are concerned about the impact on their credibility and revenue, prompting some to seek payment from AI companies to train language models on their content. However, these generative AI models are not perfect and require user feedback to improve accuracy and avoid errors.

Generative artificial intelligence (AI) tools, such as ChatGPT, have the potential to supercharge disinformation campaigns in the 2024 elections, increasing the quantity, quality, and personalization of false information distributed to voters, but there are limitations to their effectiveness and platforms are working to mitigate the risks.

Dezeen, an online architecture and design resource, has outlined its policy on the use of artificial intelligence (AI) in text and image generation, stating that while it embraces new technology, it does not publish stories that use AI-generated text unless the story is focused on AI and clearly labeled as such, and it favors publishing human-authored illustrations over AI-generated images.

AI-generated deepfakes have the potential to manipulate elections, but research suggests that the polarized state of American politics may actually inoculate voters against misinformation regardless of its source.

A developer has created an AI-powered propaganda machine called CounterCloud, using OpenAI tools like ChatGPT, to demonstrate how easy and inexpensive it is to generate mass propaganda. The system can autonomously generate convincing content 90% of the time and poses a threat to democracy by spreading disinformation online.

Generative artificial intelligence, particularly large language models, has the potential to revolutionize various industries and add trillions of dollars of value to the global economy, according to experts, as Chinese companies invest in developing their own AI models and promoting their commercial use.

Generative AI tools are causing concern in the tech industry because they produce unreliable and low-quality content on the web, leading to issues of authorship, incorrect information, and a potential information crisis.

Generative AI is increasingly being used in marketing, with 73% of marketing professionals already utilizing it to create text, images, videos, and other content, offering benefits such as improved performance, creative variations, cost-effectiveness, and faster creative cycles. Marketers need to embrace generative AI or risk falling behind their competitors, as it revolutionizes various aspects of marketing creatives. While AI will enhance efficiency, humans will still be needed for strategic direction and quality control.

Google will require political ads to disclose if they contain synthetic content created using artificial intelligence (AI) in an effort to combat the spread of disinformation during the upcoming US presidential election.

Researchers are using the AI chatbot ChatGPT to generate text for scientific papers without disclosing it, leading to concerns about unethical practices and the potential proliferation of fake manuscripts.

AI on social media platforms, both as a tool for manipulation and for detection, is seen as a potential threat to voter sentiment in the upcoming US presidential elections, with China-affiliated actors leveraging AI-generated visual media to emphasize politically divisive topics, while companies like Accrete AI are employing AI to detect and predict disinformation threats in real-time.

Artificial intelligence (AI) poses a high risk to the integrity of the election process, as evidenced by the use of AI-generated content in politics today, and there is a need for stronger content moderation policies and proactive measures to combat the use of AI in coordinated disinformation campaigns.

China is employing artificial intelligence to manipulate American voters through the dissemination of AI-generated visuals and content, according to a report by Microsoft.

Researchers have admitted to using a chatbot to help draft an article, leading to the retraction of the paper and raising concerns about the infiltration of generative AI in academia.

More than half of Americans believe that misinformation spread by artificial intelligence (AI) will impact the outcome of the 2024 presidential election, with supporters of both former President Trump and President Biden expressing concerns about the influence of AI on election results.

The A.V. Club, the iconic entertainment site, received backlash for publishing AI-generated articles that were found to be copied verbatim from IMDb, raising concerns about the use of AI in journalism and its potential impact on human jobs.

A surge in AI-generated child sexual abuse material (CSAM) circulating online has been observed by the Internet Watch Foundation (IWF), raising concerns about the ability to identify and protect real children in need. Efforts are being made by law enforcement and policymakers to address the growing issue of deepfake content created using generative AI platforms, including the introduction of legislation in the US to prevent the use of deceptive AI in elections.

The generative AI boom has led to a "shadow war for data," as AI companies scrape information from the internet without permission, sparking a backlash among content creators and raising concerns about copyright and licensing in the AI world.

Artificial intelligence should not be used in journalism due to the potential for generating fake news, undermining the principles of journalism, and threatening the livelihood of human journalists.

Conversational AI and generative AI are two branches of AI with distinct differences and capabilities, but they can also work together to shape the digital landscape by enabling more natural interactions and creating new content.

AI technology, particularly generative language models, is starting to replace human writers, with the author of this article experiencing firsthand the impact of AI on his own job and the writing industry as a whole.

Generative AI is empowering fraudsters with sophisticated new tools, enabling them to produce convincing scam texts, clone voices, and manipulate videos, posing serious threats to individuals and businesses.

Artificial intelligence (AI) has become the new focus of concern for tech ethicists, surpassing social media and smartphones, with exaggerated claims of AI's potential to cause the extinction of the human race. These fear-mongering tactics and populist misinformation have garnered attention and book deals for some, but they lack nuance and overlook the potential benefits of AI.

Generative AI is a form of artificial intelligence that can create various forms of content, such as images, text, music, and virtual worlds, by learning patterns and rules from existing data, and its emergence raises ethical questions regarding authenticity, intellectual property, and job displacement.

English actor and broadcaster Stephen Fry expresses concerns over AI and its potential impact on the entertainment industry, citing examples of his own voice being duplicated for a documentary without his knowledge or consent, and warns that the technology could be used for more dangerous purposes such as generating explicit content or manipulating political speeches.

AI-generated content is becoming increasingly prevalent in political campaigns and poses a significant threat to democratic processes as it can be used to spread misinformation and disinformation to manipulate voters.

The use of generative AI poses risks to businesses, including the potential exposure of sensitive information, the generation of false information, and the potential for biased or toxic responses from chatbots. Additionally, copyright concerns and the complexity of these systems further complicate the landscape.