Main topic: The demand for computer chips to train AI models and its impact on startups.

Key points:

1. The surge in demand for AI training has created a need for access to GPUs, leading to a shortage and high costs.

2. Startups prefer using cloud providers for access to GPUs due to the high costs of building their own infrastructure.

3. The reliance on Nvidia as the main provider of AI training hardware has contributed to the scarcity and expense of GPUs, causing startups to explore alternative options.

Main topic: Shortage of GPUs and its impact on AI startups

Key points:

1. The global rush to integrate AI into apps and programs, combined with lingering manufacturing challenges, has resulted in shortages of GPUs.

2. Shortages of the best-suited GPUs at the major cloud computing vendors have pushed AI startups onto more powerful and more expensive GPUs, raising their costs.

3. Companies are innovating and seeking alternative solutions to maintain access to GPUs, including optimization techniques and partnerships with alternative cloud providers.

Nvidia has reported explosive sales growth for AI GPU chips, which has significant implications for Advanced Micro Devices (AMD) as it prepares to release a competing chip in Q4. Analysts believe that AMD's growth targets for AI GPU chips are too low and that the company has the potential to capture a meaningful share of the market from Nvidia.

Nvidia's CEO, Jensen Huang, predicts that upgrading data centers for AI, which includes the cost of expensive GPUs, will amount to $1 trillion over the next 4 years, with cloud providers like Amazon, Google, Microsoft, and Meta expected to shoulder a significant portion of this bill.

Chinese GPU developers are looking to fill the void in their domestic market created by US restrictions on AI and HPC exports to China. Companies like Iluvatar CoreX and Moore Threads are collaborating with local cloud computing providers to run their LLM services and are shifting their focus from gaming hardware to the data center business.

Major technology firms, including Microsoft, face a shortage of GPUs, particularly from Nvidia, which could hinder their ability to maximize AI-generated revenue in the coming year.

GPUs are well-suited to AI applications because they move large amounts of data to and from memory efficiently, much like a fleet of trucks working in parallel to hide latency.
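The truck analogy can be made concrete with Little's law: sustained throughput equals the amount of data in flight divided by the round-trip latency. The sketch below is illustrative only. The function names and the figures (64-byte requests, 500 ns latency, 1,000 bytes/ns peak bandwidth) are assumptions chosen for the example, not measurements of any particular GPU.

```python
import math


def effective_throughput(in_flight, payload_bytes, latency_ns, peak_bytes_per_ns):
    """Little's law: throughput = data in flight / latency, capped at peak bandwidth."""
    return min(in_flight * payload_bytes / latency_ns, peak_bytes_per_ns)


def requests_needed(payload_bytes, latency_ns, peak_bytes_per_ns):
    """Smallest number of concurrent requests that saturates the memory system."""
    return math.ceil(peak_bytes_per_ns * latency_ns / payload_bytes)


# Hypothetical figures, for illustration only.
PAYLOAD = 64     # bytes carried per request (one "truck load")
LATENCY = 500    # nanoseconds per round trip
PEAK = 1000      # bytes per nanosecond, i.e. about 1 TB/s

one_truck = effective_throughput(1, PAYLOAD, LATENCY, PEAK)
fleet_size = requests_needed(PAYLOAD, LATENCY, PEAK)
full_fleet = effective_throughput(fleet_size, PAYLOAD, LATENCY, PEAK)

print(f"one request in flight: {one_truck} bytes/ns")
print(f"requests needed to saturate: {fleet_size}")
print(f"throughput with full fleet: {full_fleet} bytes/ns")
```

Under these assumed numbers, a single outstanding request uses a tiny fraction of the available bandwidth, while thousands of concurrent requests reach the peak. Keeping that many requests in flight is the job a GPU's large pool of parallel threads is designed to do.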

Taiwan Semiconductor Manufacturing Co. (TSMC) expects supply constraints on AI chips to ease in about 18 months, with Chairman Mark Liu stating that the squeeze is temporary and could be alleviated by the end of 2024.

TSMC has warned that sourcing high-end GPUs from Nvidia will remain difficult until at least the end of 2024 due to a lack of advanced packaging capacity, affecting not only Nvidia but also AMD's upcoming Instinct MI300-series accelerators that rely on the same packaging technology.

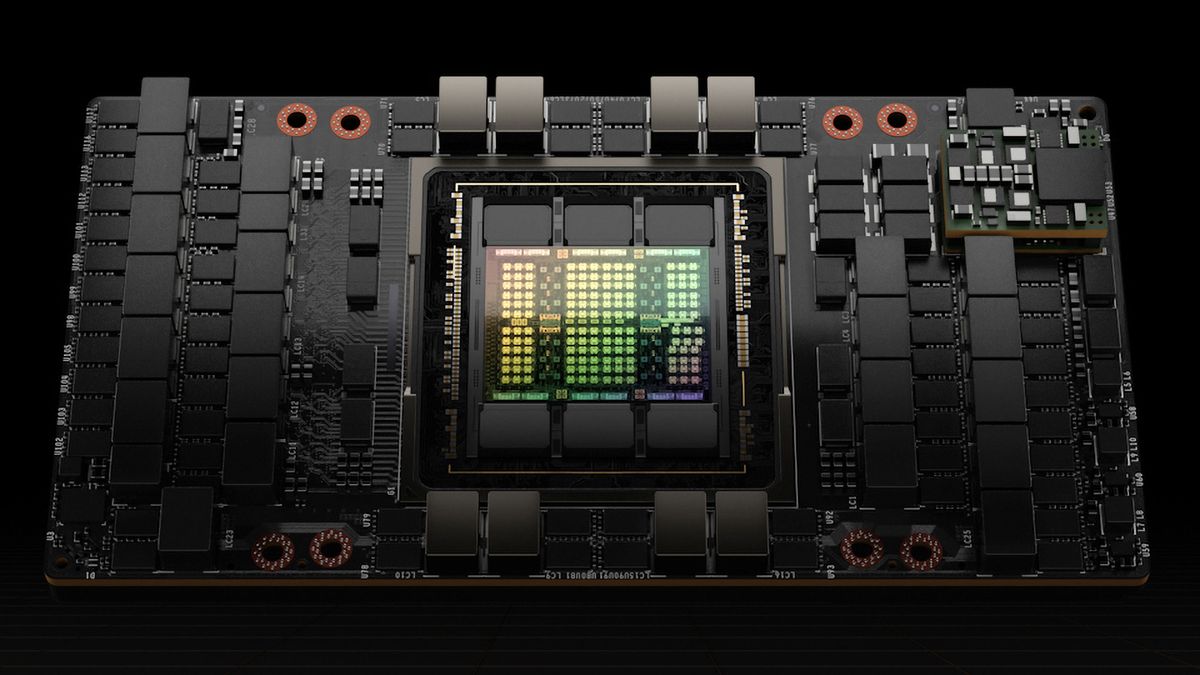

Nvidia's success in the AI industry can be attributed to its graphics processing units (GPUs), which have become crucial tools for AI development because they can perform parallel processing and complex mathematical operations at a rapid pace. However, the long-term market for AI remains uncertain, and Nvidia's dominance may not be guaranteed indefinitely.

Despite a significant decline in PC graphics card shipments due to the pandemic, Advanced Micro Devices (AMD) sees a glimmer of hope: shipments increased by 3% from the previous quarter, indicating that demand may be bottoming out. Its data center GPU business is expected to thrive in the second half of the year due to increased interest and sales in AI workloads.

The server market is experiencing a shift towards GPUs, particularly for AI processing work, leading to a decline in server shipments but an increase in average prices; however, this investment in GPU systems has raised concerns about sustainability and carbon emissions.

Intel is integrating AI inferencing engines into its processors with the goal of shipping 100 million "AI PCs" by 2025, as part of its effort to establish local AI on the PC as a new market and eliminate the need for cloud-based AI applications.

Nvidia's supply of compute GPUs for AI and high-performance computing applications is improving, according to Microsoft's CTO, Kevin Scott, due to easing demand and Nvidia's plans to increase supply in the coming year.