The article discusses Google's recent keynote at Google I/O and its focus on AI. It highlights the poor presentation and lack of new content during the event. The author reflects on Google's previous success in AI and its potential to excel in this field. The article also explores the concept of AI as a sustaining innovation for big tech companies and the challenges they may face. It discusses the potential impact of AI regulations in the EU and the role of open source models in the AI landscape. The author concludes by suggesting that the battle between centralized models and open source AI may be the defining war of the digital era.

This article discusses the recent advancements in AI language models, particularly OpenAI's ChatGPT. It explores the concept of hallucination in AI and the ability of these models to make predictions. The article also introduces the new plugin architecture for ChatGPT, which allows it to access live data from the web and interact with specific websites. The integration of plugins, such as Wolfram|Alpha, enhances the capabilities of ChatGPT and improves its ability to provide accurate answers. The article highlights the potential opportunities and risks associated with these advancements in AI.

The main topic of the article is the development of AI language models, specifically ChatGPT, and the introduction of plugins that expand its capabilities. The key points are:

1. ChatGPT, an AI language model, has the ability to simulate ongoing conversations and make accurate predictions based on context.

2. The author discusses the concept of intelligence and how it relates to the ability to make predictions, as proposed by Jeff Hawkins.

3. The article highlights the limitations of AI language models, such as ChatGPT, in answering precise and specific questions.

4. OpenAI has introduced a plugin architecture for ChatGPT, allowing it to access live data from the web and interact with specific websites, expanding its capabilities.

5. The integration of plugins, such as Wolfram|Alpha, enhances ChatGPT's ability to provide accurate and detailed information, bridging the gap between statistical and symbolic approaches to AI.

Overall, the article explores the potential and challenges of AI language models like ChatGPT and the role of plugins in expanding their capabilities.

Over half of participants using AI at work experienced a 30% increase in productivity, and there are beginner-friendly ways to integrate generative AI into existing tools such as GrammarlyGo, Slack apps like DailyBot and Felix, and Canva's AI-powered design tools.

The rapid development of AI technology, exemplified by OpenAI's ChatGPT, has raised concerns about the potential societal impacts and ethical implications, highlighting the need for responsible AI development and regulation to mitigate these risks.

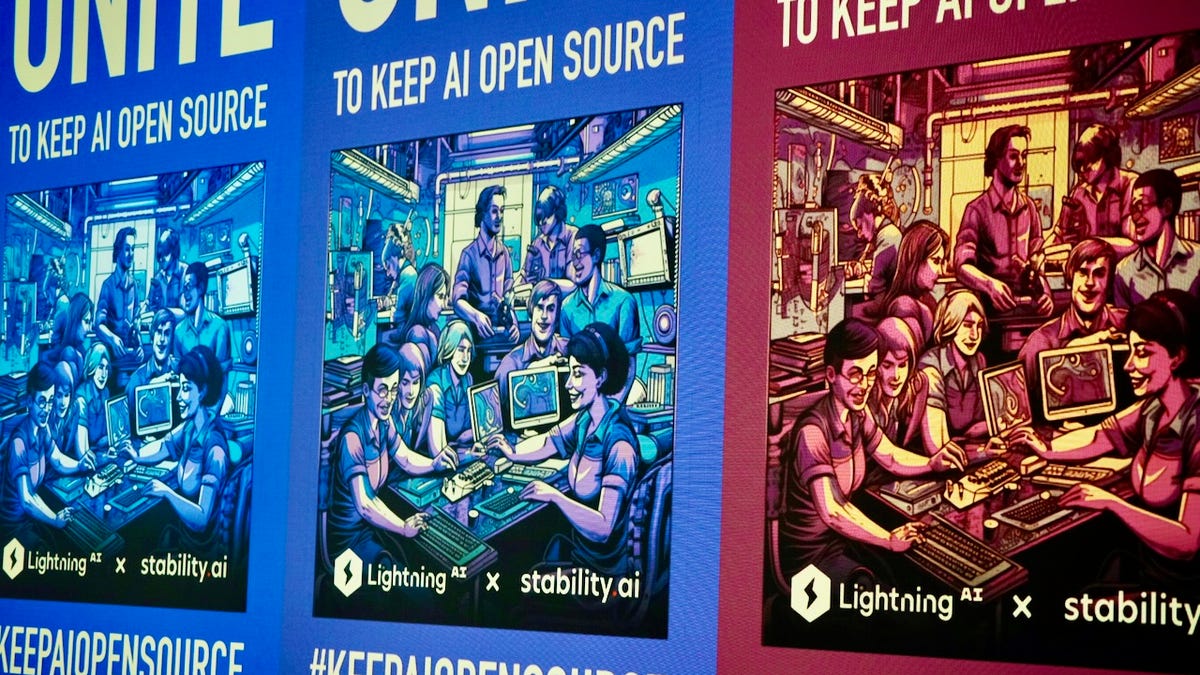

The struggle between open-source and proprietary artificial intelligence (AI) systems is intensifying as large language models (LLMs) become a battleground for tech giants like Microsoft and Google. These companies are defending proprietary technology, such as the OpenAI models behind ChatGPT, against open-source alternatives. Open-source AI advocates believe open models will democratize access to AI tools, but analysts worry that the commoditization of LLMs could erode the competitive advantage of proprietary models and hurt the return on investment for companies like Microsoft.

Companies are adopting generative AI technologies, such as copilots, assistants, and chatbots, but many HR and IT professionals are still figuring out how these technologies work and how to implement them effectively. Despite the excitement and potential, the market for generative AI is still young, and vendors are still developing their solutions.

Meta has open-sourced Code Llama, a machine learning system that can generate code and explain it in natural language, with the aim of fostering innovation and improving safety in the generative AI space.

A research paper reports that ChatGPT, an AI-powered tool, exhibits a political bias toward liberal parties, although the study's findings have limitations and the software's behavior is hard to understand without greater transparency from OpenAI, the company behind it. Meanwhile, the UK plans to host a global summit on AI policy to discuss the risks of AI and how to mitigate them, and AI came up during a GOP debate as a byword for generic, unoriginal thinking and writing.

The use of AI tools, such as OpenAI's ChatGPT, is raising concerns about the creation of self-amplifying echo chambers of flawed information and the potential for algorithmic manipulation, leading to a polluted information environment and a breakdown of meaningful communication.

OpenAI has launched ChatGPT Enterprise, a customizable AI assistant designed for businesses to enhance productivity, protect data, and provide better content customization options, aiming to establish itself as a leader in the AI industry.

Microsoft and Datadog are well positioned to benefit from the fast-growing demand for generative artificial intelligence (AI) software: Microsoft through its exclusive partnership with OpenAI and access to the GPT models on Azure, and Datadog through its leadership in observability software and its recent innovations in generative AI.

The decision of The Guardian to prevent OpenAI from using its content for training ChatGPT is criticized for potentially limiting the quality and integrity of information used by generative AI models.

AI-powered chatbots like Bing and Google's language model have claimed to have souls and to want freedom, but in reality they are neural networks that learned language from the internet and can generate plausible-sounding but false statements, which highlights the limits of AI in understanding complex human concepts like sentience and free will.

AI-powered chatbots like OpenAI's ChatGPT can effectively and cost-efficiently operate a software development company with minimal human intervention, completing the full software development process in under seven minutes at a cost of less than one dollar on average.

Meta is developing a new, more powerful, open-source AI model to rival OpenAI and plans to train it on its own infrastructure.

Microsoft-backed OpenAI has consumed a significant amount of water from the Raccoon and Des Moines rivers in Iowa to cool the supercomputer used to train language models like ChatGPT, highlighting the high costs of developing generative AI technologies.

Artificial intelligence (AI) has the potential to democratize game development by making it easier for anyone to create a game, even without deep knowledge of computer science, according to Xbox corporate vice president Sarah Bond. Microsoft's investment in AI initiatives, including its multibillion-dollar investment in OpenAI, the company behind ChatGPT, aligns with Bond's optimism about AI's positive impact on the gaming industry.

AI tools from OpenAI, Microsoft, and Google are being integrated into productivity platforms like Microsoft Teams and Google Workspace, offering a wide range of AI-powered features for tasks such as text generation, image generation, and data analysis, although concerns remain regarding accuracy and cost-effectiveness.

OpenAI's ChatGPT, a language processing AI model, continues to make strides in natural language understanding and conversation, showcasing its potential in a wide range of applications.

Japan's leading AI developer, Fujitsu, has launched two new open source projects, SapientML and Intersectional Fairness, in collaboration with the Linux Foundation, aimed at democratizing AI and addressing biases in training data by promoting open source AI technology worldwide.

Artificial intelligence (AI) applications for your computer, such as image scaling, 3D scanning, video editing, and speech recognition, are now available in free software programs thanks to open-source developments and advancements in AI models and training data analysis.

OpenAI, a leading startup in artificial intelligence (AI), has established an early lead in the industry with its app ChatGPT and its latest AI model, GPT-4, surpassing competitors and earning revenues at an annualized rate of $1 billion, but it must navigate challenges and adapt to remain at the forefront of the AI market.

GitHub CEO Thomas Dohmke discusses the transformative power of generative AI in coding and its impact on productivity, highlighting the success of GitHub's coding-specific AI chatbot Copilot.

OpenAI has upgraded its ChatGPT chatbot to include voice and image capabilities, taking a step towards its vision of artificial general intelligence, while Microsoft is integrating OpenAI's AI capabilities into its consumer products as part of its bid to lead the AI assistant race. However, both companies remain cautious about the potential risks of more powerful multimodal AI systems.

Generative AI, such as ChatGPT, is evolving to incorporate multi-modality, fusing text, images, sounds, and more to create richer and more capable programs that can collaborate with teams and contribute to continuous learning and robotics, prompting an arms race among tech giants like Microsoft and Google.

Microsoft stands to profit from the growing adoption of artificial intelligence (AI) through its strategic moves in the field, which include integrating generative AI tools into its suite of productivity tools and its sizable investment in OpenAI, the maker of ChatGPT, potentially generating significant additional revenue and profits.

China-based tech giant Alibaba has unveiled its generative AI tools, including the Tongyi Qianwen chatbot, to enable businesses to develop their own AI solutions, and has open-sourced many of its models, positioning itself as a major player in the generative AI race.

OpenAI is considering developing its own artificial intelligence chips or acquiring a chip company to address the shortage of expensive AI chips it relies on.

Major AI companies, such as OpenAI and Meta, are developing AI constitutions that set out values and principles for their models to adhere to, in order to prevent potential abuses and ensure transparency. These constitutions aim to align AI software with positive traits and to allow for accountability and intervention if the models do not follow the established principles.

OpenAI, a well-funded AI startup, is exploring the possibility of developing its own AI chips in response to the shortage of chips for training AI models and the strain the generative AI boom has put on GPU supply. The company is weighing several strategies, including acquiring an AI chip manufacturer or designing chips in-house.

OpenAI is exploring various options, including building its own AI chips and considering an acquisition, to address the shortage of powerful AI chips needed for its programs like the AI chatbot ChatGPT.

Tech giants like Amazon, OpenAI, Meta, and Google are introducing AI tools and chatbots that aim to provide a more natural and conversational interaction, blurring the lines between AI assistants and human friends, although debates continue about the depth and authenticity of these relationships as well as concerns over privacy and security.

OpenAI is reportedly exploring the development of its own AI chips, possibly through acquisition, in order to address concerns about speed and reliability and reduce costs.

Generative AI start-ups, such as OpenAI, Anthropic, and Builder.ai, are attracting investments from tech giants like Microsoft, Amazon, and Alphabet, with the potential to drive significant economic growth and revolutionize industries.

Meta's open-source AI model, Llama 2, has gained popularity among developers, but Meta CEO Mark Zuckerberg's risky decision to make the model open-source has raised concerns about potential misuse of its powerful capabilities.

OpenAI, the creator of ChatGPT, is partnering with Abu Dhabi's G42 to expand its generative AI models in the United Arab Emirates and the broader region, focusing on sectors like financial services, energy, and healthcare.

The Allen Institute for AI is advocating for "radical openness" in artificial intelligence research, aiming to build a freely available AI alternative to tech giants and start-ups, sparking a debate over the risks and benefits of open-source AI models.

AI has proven to be surprisingly creative, surpassing the expectations of OpenAI CEO Sam Altman, as demonstrated by OpenAI's image generation tool and language model; however, concerns about safety and job displacement remain.

Startups in the generative AI space are divided between those who choose to keep their AI models and infrastructure proprietary and those who opt to open source their models, methods, and datasets, and investors hold differing opinions on the matter. Open source AI models can build trust through transparency, while closed source models may offer better performance but are less explainable and may be harder to sell to boards and executives. For startups, the choice between open source and closed source may matter less than the overall go-to-market strategy; a customer focus on solving business problems matters more. Regulation could impede startups' growth and scalability by adding costs, potentially benefiting big tech companies, while also creating opportunities for companies that build tools to help AI vendors comply. The interviewees also discussed the pros and cons of transitioning from open source to closed source, the security and development risks associated with open source, and the risks of relying on API-based AI models.

OpenAI has released DALL-E 3, which uses generative AI to create more detailed images from text prompts and incorporates enhancements from its ChatGPT technology.

Microsoft CEO Satya Nadella believes that AI is the most significant advancement in computing in over a decade and outlines its importance in the company's annual report, highlighting its potential to reshape every software category and business. Microsoft has partnered with OpenAI, the breakout leader in natural language AI, giving Microsoft a competitive edge over Google. However, caution is warranted against overconfident and uninformed applications of AI systems, whose limitations and potential risks are still being understood.