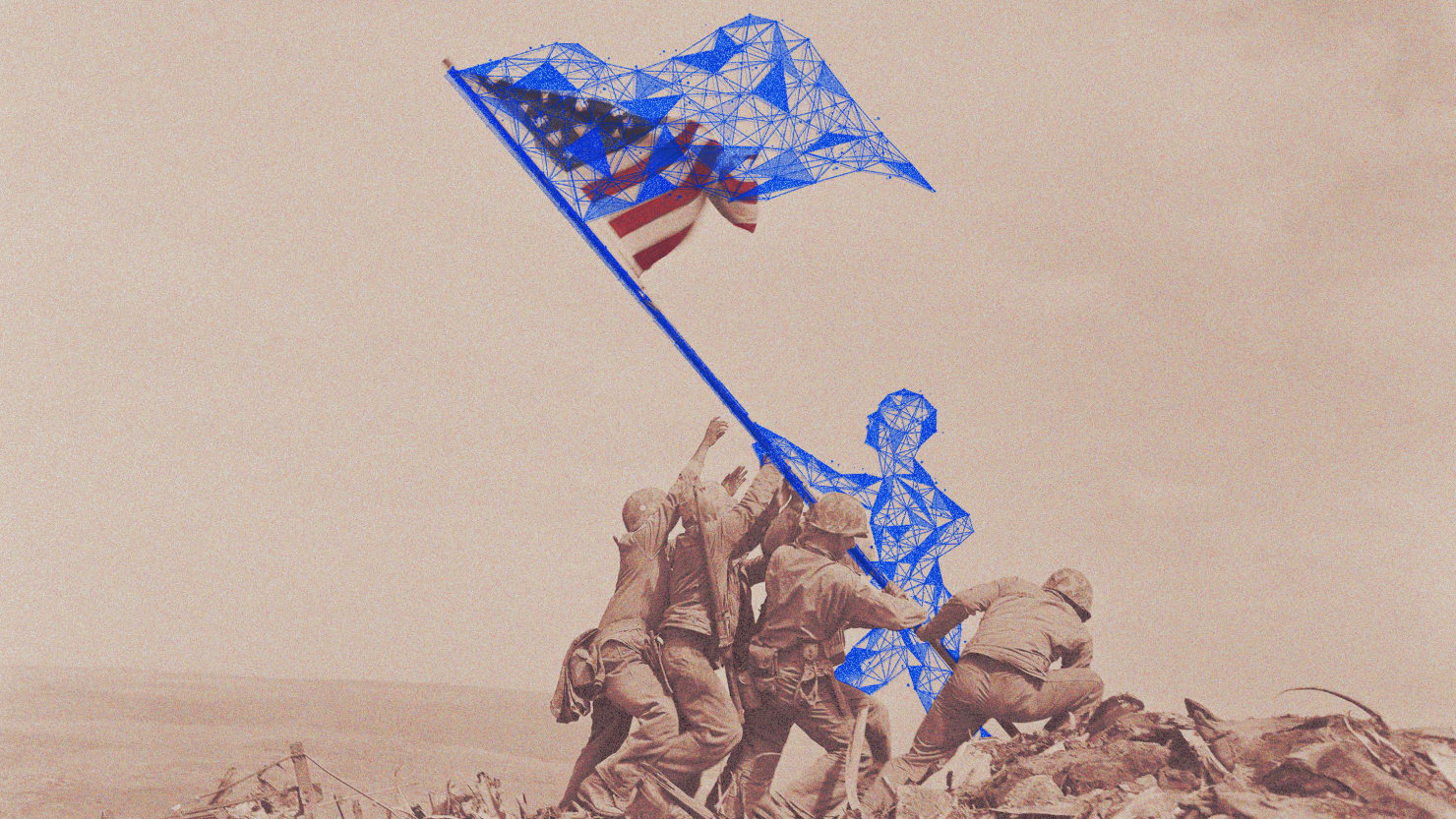

Army cyber leaders are exploring the potential of artificial intelligence (AI) for future operations but are cautious about the timeframe for its implementation, according to Maj. Gen. Paul Stanton, commander of the Cyber Center of Excellence. Their current focus is on understanding how data is aggregated and how much confidence to place in externally derived datasets. The Army is also looking at developing an AI "bill of materials" to catch up with China in the AI race and is preparing soldiers for electronic warfare on the future battlefield.

AI in warfare raises ethical questions because of the potential for catastrophic failures, abuse, security vulnerabilities, privacy issues, biases, and accountability challenges, with companies facing little to no consequences. By contrast, the use of generative AI tools in administrative and business processes offers a more stable and low-risk application. Additionally, regulators are concerned about AI's inaccurate emotion recognition capabilities and its potential for social control.

Hollywood studios are considering the use of generative AI tools, such as ChatGPT, to assist in screenwriting, but concerns remain about copyright protection, because works created solely by AI are not currently copyrightable.

Companies are adopting generative AI technologies such as copilots, assistants, and chatbots, but many HR and IT professionals are still figuring out how these technologies work and how to implement them effectively. Despite the excitement and potential, the market for generative AI is young, and vendors are still developing solutions.

The Department of Defense lacks standardized guidance for acquiring and implementing artificial intelligence (AI) at speed, hindering the adoption of cutting-edge technology by warfighters and leaving a gap between US capabilities and those of adversaries like China. The Pentagon needs to create agile acquisition pathways and universal standards for AI to accelerate its integration into the defense enterprise.

China's People's Liberation Army aims to be a leader in generative artificial intelligence for military applications, but faces challenges including data limitations, political restrictions, and a need for trust in the technology. Despite these hurdles, China is at a similar level or even ahead of the US in some areas of AI development and views AI as a crucial component of its national strategy.

The Pentagon can learn valuable lessons about harnessing AI from the historical development of carrier aviation, including the importance of realistic experimentation, navigating bureaucracy effectively, and empowering visionary personnel, in order to fully grasp the scope of AI's potential military impact.

Salesforce has released an AI Acceptable Use Policy that outlines the restrictions on the use of its generative AI products, including prohibiting their use for weapons development, adult content, profiling based on protected characteristics, medical or legal advice, and more. The policy emphasizes the need for responsible innovation and sets clear ethical guidelines for the use of AI.

Generative AI is being embraced by the creative side of film and TV production to augment the work of artists and improve the creative process rather than to replace them. Examples include the use of procedural generation and style transfer in animation and the acceleration of dialogue and collaboration between artists and directors. However, concerns remain about the potential for AI to replace artists and the need for informed decision-making to ensure that AI is used responsibly.

Cybercriminals are misusing generative AI tools to drive a surge in cyberattacks, according to a report from Check Point Research. The tools are making attackers more productive and led to an 8% spike in global cyberattacks in the second quarter of the year.

Artificial intelligence (AI) is seen as a tool that can inspire and collaborate with human creatives in the movie and TV industry, but concerns remain about copyright and ethical issues, according to Greg Harrison, chief creative officer at MOCEAN. Although AI has potential for visual brainstorming and automation of non-creative tasks, it should be used cautiously and in a way that values human creativity and culture.

The use of AI tools, such as OpenAI's ChatGPT, is raising concerns about the creation of self-amplifying echo chambers of flawed information and the potential for algorithmic manipulation, leading to a polluted information environment and a breakdown of meaningful communication.

Utah educators are concerned about the use of generative AI, such as ChatGPT, in classrooms, as it can create original content and potentially be used for cheating, leading to discussions on developing policies for AI use in schools.

Generative AI tools like ChatGPT could change the nature of certain jobs by breaking them down into smaller, less skilled roles, which may lead to job degradation and lower pay, while also creating new job opportunities. The impact of generative AI on the workforce is uncertain, but it is important for workers to advocate for better conditions and be prepared for potential changes.

Generative AI has the potential to significantly boost productivity and add trillions of dollars to the global economy, but it is still in the early stages of adoption. Widespread use at many companies remains years away because of concerns about data security, accuracy, and economic implications.

Generative artificial intelligence, such as ChatGPT and Stable Diffusion, raises legal questions related to data use, copyrights, patents, and privacy, leading to lawsuits and uncertainties that could slow down technology adoption.

Generative AI has revolutionized various sectors by producing novel content, but it also raises concerns around biases, intellectual property rights, and security risks. Debates on copyrightability and ownership of AI-generated content need to be resolved, and existing laws should be modified to address the risks associated with generative AI.

Generative AI is being used to create misinformation that is increasingly difficult to distinguish from reality, posing significant threats such as manipulating public opinion, disrupting democratic processes, and eroding trust. To combat this, experts advise skepticism, attention to detail, and not sharing potentially AI-generated content.

AI technology is making it easier and cheaper to produce mass-scale propaganda campaigns and disinformation, using generative AI tools to create convincing articles, tweets, and even journalist profiles, raising concerns about the spread of AI-powered fake content and the need for mitigation strategies.

Generative artificial intelligence (AI) tools, such as ChatGPT, have the potential to supercharge disinformation campaigns in the 2024 elections, increasing the quantity, quality, and personalization of false information distributed to voters, but there are limitations to their effectiveness and platforms are working to mitigate the risks.

"Generative" AI is being explored in various fields such as healthcare and art, but there are concerns regarding privacy and theft that need to be addressed.

Generative AI tools are causing concern in the tech industry because they produce unreliable and low-quality content on the web, raising issues of authorship and incorrect information and the prospect of an information crisis.

The Guardian's decision to prevent OpenAI from using its content to train ChatGPT has been criticized for potentially limiting the quality and integrity of the information used by generative AI models.

Almost a quarter of organizations are currently using AI in software development, and the majority of them are planning to continue implementing such systems, according to a survey from GitLab. The use of AI in software development is seen as essential to avoid falling behind, with high confidence reported by those already using AI tools. The top use cases for AI in software development include natural-language chatbots, automated test generation, and code change summaries, among others. Concerns among practitioners include potential security vulnerabilities and intellectual property issues associated with AI-generated code, as well as fears of job replacement. Training and verification by human developers are seen as crucial aspects of AI implementation.

Using AI tools like ChatGPT to write smart contracts and build cryptocurrency projects can lead to more problems, bugs, and attack vectors, according to CertiK's security chief, Kang Li, who believes that inexperienced programmers may create catastrophic design flaws and vulnerabilities. Additionally, AI tools are becoming more successful at social engineering attacks, making it harder to distinguish between AI-generated and human-generated messages.

Generative AI is being explored for augmenting infrastructure as code tools, with developers considering using AI models to analyze IT through logfiles and potentially recommend infrastructure recipes needed to execute code. However, building complex AI tools like interactive tutors is harder and more expensive, and securing funding for big AI investments can be challenging.

Generative artificial intelligence, such as ChatGPT, is increasingly being used by students and professors in education, with some finding it helpful for tasks like outlining papers, while others are concerned about the potential for cheating and the quality of AI-generated responses.

Eight additional U.S.-based AI developers, including NVIDIA, Scale AI, and Cohere, have pledged to develop generative AI tools responsibly, joining a growing list of companies committed to the safe and trustworthy deployment of AI.

The Delhi High Court has ruled that ChatGPT, a generative artificial intelligence tool, cannot be used to settle legal issues due to varying responses depending on how queries are framed, highlighting the potential for biased answers; however, experts suggest that AI can still assist in administrative tasks within the adjudication process.

Generative AI is a form of artificial intelligence that can create various forms of content, such as images, text, music, and virtual worlds, by learning patterns and rules from existing data, and its emergence raises ethical questions regarding authenticity, intellectual property, and job displacement.

OpenAI has released DALL-E 3, an AI image synthesis model integrated with ChatGPT, that can generate images based on complex descriptions and handle in-image text generation without the need for manual engineering.

Generative AI, which includes language models like ChatGPT and image generators like DALL·E 2, has led to the emergence of "digital necromancy," raising the ethical concern of communicating with digital simulations of the deceased, although it can be seen as an extension of existing practices of remembrance and commemoration rather than a disruptive force.

Open source and artificial intelligence have a deep connection, as open-source projects and tools have played a crucial role in the development of modern AI, including popular generative AI models like ChatGPT and Llama 2.