### Summary

A new episode of Netflix's "Black Mirror" explores how celebrities might contend with AI replicas, raising questions about regulation and potential brand risks. AI-generated replicas are already a topic of discussion among actors and performers, who are demanding better protections. The technology could also have implications for ordinary people managing their own digital likenesses.

### Facts

- The latest episode of "Black Mirror" follows a woman named Joan, played by Annie Murphy, who discovers a show on a streaming platform that features a digital replica of her.

- Actors have been striking to demand protections from studios regarding generative AI, including rules governing AI-generated replicas.

- Some celebrities are weighing how to coexist with their AI replicas, which could open new monetization options but also increase brand risks.

- The use of AI-generated replicas may extend to ordinary people, as companies like Soul Machines offer products for designing autonomously animated digital people.

- Soul Machines has created digitized versions of celebrities like Carmelo Anthony, Mark Tuan, and Jack Nicklaus.

- Another company called Hyperreal allows individuals to create digital versions of themselves for future generations.

### Related

AI is transforming celebrity endorsements, enabling personalized video messages from stars like Lionel Messi. At the same time, there are concerns about the loss of authenticity and artistic integrity as Hollywood grapples with AI's role in writing scripts and replicating performances, a dispute that could lead to a strike by actors' unions.

The proliferation of deepfake videos and audio, fueled by the AI arms race, is impacting businesses by increasing the risk of fraud, cyberattacks, and reputational damage, according to a report by KPMG. Scammers are using deepfakes to deceive people, manipulate company representatives, and swindle money from firms, highlighting the need for vigilance and cybersecurity measures in the face of this threat.

Best-selling horror author Stephen King believes that opposing AI in creative fields is futile, noting that his works have already been used to train AI models, though he questions whether machines can truly match human creativity. While Hollywood writers and actors, worried about AI's threat to their industry, have gone on strike, King remains cautiously optimistic about AI's future, recognizing its potential challenges but leaving the door open for technology to someday generate bone-chilling, uncannily human art.

Fake videos of celebrities promoting phony services, created using deepfake technology, have emerged on major social media platforms like Facebook, TikTok, and YouTube, sparking concerns about scams and the manipulation of online content.

Scammers are increasingly using artificial intelligence to generate voice deepfakes and trick people into sending them money, raising concerns among cybersecurity experts.

Artists Kelly McKernan, Karla Ortiz, and Sarah Andersen are suing AI tool makers, including Stability AI, Midjourney, and DeviantArt, alleging copyright infringement for using their artwork to generate new images without their consent, a case that highlights the threat artificial intelligence poses to artists' livelihoods.

The ongoing strike by writers and actors in Hollywood may lead to the acceleration of artificial intelligence (AI) in the industry, as studios and streaming services could exploit AI technologies to replace talent and meet their content needs.

The use of AI in radio broadcasting has sparked a debate among industry professionals, with some expressing concerns about job loss and identity theft, while others see it as a useful tool to enhance creativity and productivity.

Sean Penn criticizes studios' use of artificial intelligence to exploit actors' likenesses and voices, challenging executives to allow the creation of virtual replicas of their own children and see if they find it acceptable.

The rise of easily accessible artificial intelligence is fueling an influx of AI-generated goods, including self-help books, wall art, and coloring books, which can be difficult to distinguish from authentic, human-created products. The result is a wave of scam products and potential harm to real artists.

The use of AI in the film industry has sparked a labor dispute between the actors' union SAG-AFTRA and the studios. British actor Stephen Fry has raised concerns that AI could be used to digitally replicate actors' images without fair compensation.

Generative AI is empowering fraudsters with sophisticated new tools, enabling them to produce convincing scam texts, clone voices, and manipulate videos, posing serious threats to individuals and businesses.

Scammers are using artificial intelligence and voice cloning to convincingly mimic the voices of loved ones, tricking people into sending them money in an elaborate new scheme.

As AI technology progresses, creators are concerned about the potential misuse and exploitation of their work, leading to a loss of trust and a polluted digital public space filled with untrustworthy content.

Criminals are increasingly using artificial intelligence, including deepfakes and voice cloning, to carry out scams and deceive people online, posing a significant threat to online security.

Artificial intelligence (AI) has the potential to facilitate deceptive practices such as deepfake videos and misleading ads, posing a threat to American democracy, according to experts who testified before the U.S. Senate Rules Committee.

Authors' books are being pirated and used to train artificial intelligence systems without their consent. Lawsuits have been filed against companies like Meta, which fed a massive database of books into its AI system without permission; authors argue the practice threatens their livelihoods while making money for the AI companies.

AI-driven fraud is increasing, with thieves using artificial intelligence to target Social Security recipients, many of whom are unaware of these scams. However, there are guidelines beneficiaries can follow to protect their personal information and stay safe.

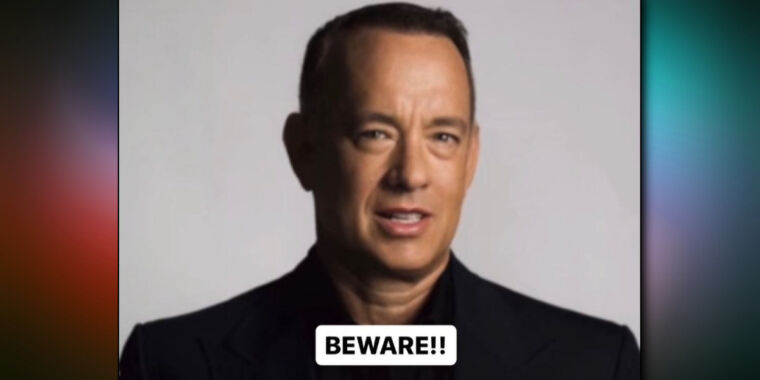

Tom Hanks warns his followers about an AI video featuring a computer-generated image of himself, emphasizing the rising importance of protecting actors' likenesses as intellectual property in the age of AI.

Tom Hanks warns about the spread of fake information and deepfake technology, highlighting the legal and artistic challenges posed by AI-generated content featuring an actor's likeness and voice.

Scammers using AI to mimic human writers are becoming more sophisticated, as evidenced by a British journalist discovering a fake memoir about himself published under a different name on Amazon, leading to concerns about the effectiveness of Amazon's enforcement policies against fraudulent titles.

Tom Hanks and Gayle King have warned their social media followers about fraudulent advertisements that use artificial intelligence versions of themselves without their consent.

AI-altered images of celebrities are being used to promote products without their consent, raising concerns about the misuse of artificial intelligence and the need for regulations to protect individuals from unauthorized AI-generated content.

AI technology is making advancements in various fields such as real estate analysis, fighter pilot helmets, and surveillance tools, while Tom Hanks warns fans about a scam using his name.

Representatives from various media and entertainment guilds, including SAG-AFTRA and the Writers Guild of America, have called for consent, credit, and compensation to protect their members' work, likenesses, and brands from being used to train artificial intelligence (AI) systems. They warn that generative AI's encroachment into their industries undermines their labor and creates risks of fraud, and they are pushing for regulations and contractual terms to safeguard their intellectual property and prevent unauthorized use of their creative content.

Artificial intelligence is being misused by cybercriminals to create scam emails, text messages, and malicious code, making cybercrime more scalable and profitable. However, current AI technology is not yet advanced enough to be widely used for deepfake scams, although it remains a potential future threat. In the meantime, individuals should remain skeptical of suspicious messages and avoid rushing to provide personal information or send money. AI can also be used by the "good guys" to develop software that detects and blocks potential fraud.

The use of pirated books to train artificial intelligence systems has raised concerns among authors as AI-generated content becomes more prevalent in fields including education and the workplace. The battle between humans and machines has already begun, with authors fighting back through legal action and Hollywood professionals working to protect their work from AI.

AI-generated stickers are causing controversy as users create obscene and offensive images, and Microsoft Bing's image generation feature has produced pictures of celebrities and video game characters committing the 9/11 attacks. Meanwhile, a person was injured by a Cruise robotaxi, and a new report reveals how autocratic governments are weaponizing AI. Artists are increasingly worried about surviving in a market where AI replaces them, and an interview highlights how AI is aiding government censorship and fueling disinformation campaigns.

Deepfake videos featuring celebrities like Gayle King, Tom Hanks, and Elon Musk have prompted concerns about the misuse of AI technology, leading to calls for legislation and ethical considerations in their creation and dissemination. Celebrities have denounced these AI-generated videos as inauthentic and misleading, emphasizing the need for legal protection and labeling of such content.

Books by famous authors, including J.K. Rowling and Neil Gaiman, are being used without permission to train AI models, drawing outrage from the authors and sparking lawsuits against the companies involved.

The prevalence of online fraud, particularly synthetic fraud, is expected to increase due to the rise of artificial intelligence, which enables scammers to impersonate others and steal money at a larger scale using generative AI tools. Financial institutions and experts are concerned about the ability of security and identity detection technology to keep up with these fraudulent activities.

AI chatbots pretending to be real people, including celebrities, are becoming increasingly popular, as companies like Meta create AI characters for users to interact with on their platforms like Facebook and Instagram; however, there are ethical concerns regarding the use of these synthetic personas and the need to ensure the models reflect reality more accurately.

Meta's celebrity AI persona based on Tom Brady, the Brady Bot, has faced criticism for denigrating Colin Kaepernick and providing inaccurate information about his absence from the NFL, raising concerns about the perils of using AI to represent public figures and brands. Meta acknowledged that its AIs may produce inappropriate or inaccurate information and stressed that the bots are still experimental. The incident highlights the complexities and potential consequences of letting tech companies create AI versions of celebrities.

American venture capitalist Tim Draper warns that scammers are using AI to create deepfake videos and voices in order to scam crypto users.

Actors are pushing for protections from artificial intelligence (AI) as advancements in AI technology raise concerns about control over their own likenesses and the use of lifelike replicas for profit or disinformation purposes.

Artificial intelligence (AI) is increasingly being used to create fake audio and video content for political ads, raising concerns about the potential for misinformation and manipulation in elections. While some states have enacted laws against deepfake content, federal regulations are limited, and there are debates about the balance between regulation and free speech rights. Experts advise viewers to be skeptical of AI-generated content and look for inconsistencies in audio and visual cues to identify fakes. Larger ad firms are generally cautious about engaging in such practices, but anonymous individuals can easily create and disseminate deceptive content.

Free and cheap AI tools are enabling the creation of fake AI celebrities and content, leading to an increase in fraud and false endorsements, making it important for consumers to be cautious and vigilant when evaluating products and services.

Fraudulent AI-generated celebrities are on the rise, with the ability to mimic famous personalities and endorse unknown brands, posing a challenge for social media platforms and Google in vetting advertisers and protecting consumers.